Bridges-2 User Guide

Bridges-2 User Guide

We take security very seriously. Please take a minute now to read PSC policies on passwords, security guidelines, resource use, and privacy. You are expected to comply with these policies at all times when using PSC systems. If you have questions at any time, you can send email to help@psc.edu.

Are you new to HPC?

If you are new to high performance computing, please read Getting Started with HPC before you begin your research on Bridges-2. It explains HPC concepts which may be unfamiliar. You can also check the Introduction to Unix or the Glossary for quick definitions of terms that may be new to you.

We hope that that information along with the Bridges-2 User Guide will have you diving into your work on Bridges-2. But if you have any questions, don’t hesitate to email us for help at help@psc.edu.

Questions?

PSC support is here to help you get your research started and keep it on track. If you have questions at any time, you can send email to help@psc.edu.

Before you can connect to Bridges-2, you must have a PSC password.

If you have an active allocation on any other PSC system

PSC usernames and passwords are the same across all PSC systems. You will use the same username and password on Bridges-2 as for your other PSC allocation.

If you do not have an active allocation on any other PSC system:

You must create a PSC password. Go to the web-based PSC password change utility at apr.psc.edu to set your PSC password.

PSC password policies

Computer security depends heavily on maintaining secrecy of passwords.

PSC uses Kerberos authentication on all its production systems, and your PSC password (also known as your Kerberos password) is the same on all PSC machines.

Set your initial PSC password

When you receive a PSC account, go to the web-based PSC password change utility to set your password. For security, you should use a unique password for your PSC account, not one that you use for other sites.

Change your PSC password

Changing your password changes it on all PSC systems. To change your Kerberos password, use the web-based PSC password change utility .

PSC password requirements

Your password must:

- be at least eight characters long

- contain characters from at least three of the following groups:

- lower-case letters

- upper-case letters

- digits

- special characters, excluding apostrophes (') and quotes (")

- be different from the last three PSC passwords you have used

- not be re-used on other accounts

- remain private; it must not be shared with anyone

- be changed at least once per year

Password safety

Under NO circumstances does PSC reveal any passwords over the telephone, FAX them to any location, send them through email, set them to a requested string, or perform any other action that could reveal a password.

If someone claiming to represent PSC contacts you and requests information that in any manner would reveal a password, be assured that the request is invalid and do NOT comply.

It is strongly recommended to use a Password Manager to aid in generating secure passwords and managing accounts.

PSC policies regarding privacy, security and the acceptable use of PSC resources are documented here. Questions about any of these policies should be directed to PSC User Services.

See also policies for:

Security measures

Security is very important to PSC. These policies are intended to ensure that our machines are not misused and that your data is secure.

What you can do:

You play a significant role in security! To keep your account and PSC resources secure, please:

- Be aware of and comply with PSC’s policies on security, use and privacy found in this document

- Choose strong passwords and don’t share them between accounts or with others. More information can be found in the PSC password policies.

- Utilize your local security team for advice and assistance

- Keep your computer properly patched and protected

- Report any security concerns to the PSC help desk ASAP by calling the PSC hotline at: 412-268-4960 or email help@psc.edu

What we will never do:

- PSC will never send you unsolicited emails requesting confidential information.

- We will also never ask you for your password via an unsolicited email or phone call.

Remember that the PSC help desk is always a phone call away to confirm any correspondence at 412-268-4960.

If you have replied to an email appearing to be from PSC and supplied your password or other sensitive information, please contact the help desk immediately.

What you can expect:

- We will send you email when we need to communicate with you about service outages, new HPC resources, and the like.

- We will send you email when your password is about to expire and ask you to change it by using the web-based PSC password change utility.

Other security policies

- PSC password policies

- Users must connect to PSC machines using SSH in order to avoid remote logins with clear text passwords.

- HPN-SSH (High-Performance Networking SSH) is now the default implementation of SSH on Bridges-2 DTNs (Data Transfer Nodes).

- We vigilantly monitor our computer systems and network connections for security violations

- We are in close contact with the CERT Coordination Project with regard to possible Internet security violations

Reporting security incidents

To report a security incident you should contact our Hotline at 412-268-4960. To report non-emergency security incidents you can send email to help@psc.edu.

PSC acceptable use policy

PSC’s resources are vital to the scientific community, and we have a responsibility to ensure that all resources are utilized in a responsible manner. PSC has legal and other obligations to protect its services, resources, and the intellectual property of users. Users share this responsibility by observing the rules of acceptable use that are outlined in this document. Your on-line assent to this Acceptable Use Policy is your acknowledgment that you have read and understand your responsibilities as a user of PSC Services and Resources, which refers to all computers owned or operated by PSC and all hardware, data, software, storage systems and communications networks associated with these computers. If you have questions, please contact PSC User Services at 412-268-4960 or email help@psc.edu.

By using PSC Services and Resources associated with your allocation, you agree to comply with the following conditions of use:

- You will protect any access credentials (e.g., private keys, tokens & passwords) that are issued for your sole use by PSC and not knowingly allow any other person to impersonate or share any of your identities.

- You will not use PSC Services and Resources for any unauthorized purpose, including but not limited to:

- Financial gain

- Tampering with or obstructing PSC operations

- Breaching, circumventing administrative, or security controls

- Inspecting, modifying, distributing, or copying privileged data or software without proper authorization, or attempting to do so

- Supplying, or attempting to supply, false or misleading information or identification in order to access PSC Services and Resources

- You will comply with all applicable laws and relevant regulations, such as export control law or HIPAA.

- You will immediately report any known or suspected security breach or misuse of PSC access credentials to help@psc.edu.

- Use of PSC Services and Resources is at your own risk. There are no guarantees that PSC Services and Resources will be available, that they will suit every purpose, or that data will never be lost or corrupted. Users are responsible for backing up critical data.

- Logged information, including information provided by you for registration purposes, will be used solely for administrative, operational, accounting, monitoring and security purposes.

- Violations of this Acceptable Use Policy and/or abuse of PSC Services and Resources may result in loss of access to PSC Services and Resources. Abuse will be referred to the PSC User Services manager and/or the appropriate local, state and federal authorities, at PSC's discretion.

- PSC may terminate or restrict any user's access to PSC Services and Resources, without prior notice, if such action is necessary to maintain computing availability and security for other users of the systems.

- Allocations are awarded solely for open research, intended for publication. You will only use PSC Computing Resources to perform work consistent with the stated allocation request goals and conditions of use as defined by your approved PSC project and this Acceptable Use Policy.

- PSC is entitled to regulate, suspend or terminate your access, and you will immediately comply with their instructions.

Privacy

Pittsburgh Supercomputing Center is committed to preserving your privacy. This privacy policy explains exactly what information is collected when you visit our site and how it is used.

This policy may be modified as new features are added to the site. Any changes to the policy will be posted on this page.

- Any data automatically collected from our site visitors - domain name, browser types, etc. - are used only in aggregate to help us better meet site visitors' needs.

- There is no identification of individuals from our aggregate data. Therefore, unless you choose otherwise, you are totally anonymous when visiting our site.

- We do not share data with anyone for commercial purposes.

- If you choose to submit personally identifiable information to us electronically via the PSC feedback page, email, etc., we will treat it with the same respect for privacy afforded to mailed submissions. Submission of such information is always optional.

PSC respects individual privacy and takes great effort in supporting web site privacy policy outlined above. Please be aware, however, that we may publish URLs of other sites on our web site that may not adhere to the same policy.

To report a problem on Bridges-2, please email help@psc.edu. Please report only one problem per email; it will help us to track and solve any issues more quickly and efficiently.

Be sure to include

- an informative subject line

- your PSC username

If the question concerns a particular job, include these in addition:

- the JobID

- any error messages you received

- the date and time the job ran

- link to job scripts, output and data files

- the software being used, and versions when appropriate

- a screenshot of the error or the output file showing the error, if possible

Connecting to Bridges-2 Copy this link

Bridges-2 contains two broad categories of nodes: compute nodes, which handle the production research computing, and login nodes, which are used for managing files, submitting batch jobs and launching interactive sessions. Login nodes are not suited for production computing.

When you connect to Bridges-2, you are connecting to a Bridges-2 login node. You can connect to Bridges-2 via a web browser or through a command line interface.

See the Running Jobs section of this User Guide for information on production computing on Bridges-2.

Connect in a web browser

You can access Bridges-2 through a web browser by using the OnDemand software. You will still need to understand Bridges-2’s partition structure and the options which specify job limits, like time and memory use, but OnDemand provides a more modern, graphical interface to Bridges-2.

See the OnDemand section for more information.

Connect to a command line interface

You can connect to a traditional command line interface by logging in via SSH, using an SSH client from your local machine to connect to Bridges-2 using your PSC credentials.

SSH is a program that enables secure logins over an unsecure network. It encrypts the data passing both ways so that if it is intercepted it cannot be read.

SSH is client-server software, which means that both the user’s local computer and the remote computer must have it installed. SSH server software is installed on all the PSC machines. You must install SSH client software on your local machine.

- Free SSH clients for Macs, Windows machines, and many versions of Unix are available.

- HPN-SSH (High-Performance Networking SSH) is now the default implementation of SSH on Bridges-2 DTNs.

- HPN-SSH maximizes network throughput for data transfers over long-haul network connections for protocols that employ SSH for authentication and encryption, such as SFTP and rsync.

- Clients do not need to make any updates to enjoy improved performance.

- Clients may choose to take advantage of the additional performance offered by the dedicated HPN-SSH client.

- A command line version of SSH is installed on Macs by default; if you prefer that, you can use it in the Terminal application. You can also check with your university to see if there is an SSH client that they recommend. PSC recommends HPN-SSH.

Once you have an SSH client installed, you can use your PSC credentials to connect to Bridges-2. Note that you must create your PSC password before you can use SSH to connect to Bridges-2.

- Using your SSH client, such as HPN-SSH, connect to hostname bridges2.psc.edu using the default port (22).

- Enter your PSC username and password when prompted.

Read more about using SSH to connect to PSC systems

See the official HPN-SSH PSC docs site for more details.

Public-private keys

You can also use public-private key pairs to connect to Bridges-2. To do so, you must first fill out this form to register your keys with PSC.

Allocation administration

There are two ways to change or reset your PSC password:

- Use the web-based PSC password change utility

- Use the kpasswd command when logged into a PSC system. Do not use the passwd command.

When you change your PSC password, whether you do it via the online utility or via the kpasswd command on one PSC system, you change it on all PSC systems.

The projects command will help you monitor your allocation on Bridges-2. You can determine what Bridges-2 resources you have been allocated, your remaining balance, your allocation id (used to track usage), and more. Typing projects at the command prompt will show all your allocation ids.

This user has two Bridges-2 allocations. The default allocation, abc000000p, includes the use of Bridges-2 Regular Memory and Bridges-2 GPU resources for computing and Bridges-2 Ocean for file storage. The second one, xyz000000p, includes the use of Bridges-2 Regular Memory nodes and Ocean for storage.

Accounting for Bridges-2 use varies with the type of node used, which is determined by the resources included in your allocation: “Bridges-2 Regular Memory”, for Bridges-2’s RSM (256 and 512GB) nodes); “Bridges-2 Extreme Memory”, for Bridges-2 4TB nodes; and “Bridges-2 GPU”, for Bridges-2’s GPU nodes.

For all resources and all node types, usage is defined in terms of “Service Units” or SUs. The definition of an SU varies with the type of node being used.

Bridges-2 Regular Memory

The RM nodes are allocated as “Bridges-2 Regular Memory”. This does not include Bridges-2’s GPU nodes. Each RM node has 128 cores, each of which can be allocated separately. Service Units (SUs) are defined in terms of “core-hours”: the use of one core for 1 hour.

1 core-hour = 1 SU

Because the RM nodes each hold 128 cores, if you use one entire RM node for one hour, 128 SUs will be deducted from your allocation.

128 cores x 1 hour =128 core-hours = 128 SUs

If you don’t need all 128 cores, you can use just part of an RM node by submitting to the RM-shared partition. See more about the partitions on Bridges-2 below.

Using the RM-shared partition, if you use 2 cores on a node for 30 minutes, 1 SU will be deducted from your allocation.

2 cores x 0.5 hours = 1 core-hour = 1 SU

Bridges-2 Extreme Memory

The 4TB nodes on Bridges-2 are allocated as “Bridges-2 Extreme Memory”. Accounting is done by the cores requested for the job. Service Units (SUs) are defined in terms of “core-hours”: the use of 1 core for one hour.

1 core-hour = 1 SU

If your job requests one node (96 cores) and runs for 1 hour, 96 SUs will be deducted from your allocation.

1 node x 96 cores/node x 1 hour = 96 core-hours = 96 SUs

If your job requests 3 nodes and runs for 6 hours, 1728 SUs will be deducted from your allocation.

3 nodes x 96 cores/node x 6 hours = 1728 core-hours = 1728 SUs

Bridges-2 GPU

Bridges-2 Service Units (SUs) for GPU nodes are defined in terms of “gpu-hours”: the use of one GPU Unit for one hour.

These nodes hold 8 GPU units each, each of which can be allocated separately. Service Units (SUs) are defined in terms of GPU-hours.

- For v100 nodes, 1 GPU-hour = 1 SU

- For l40s nodes, 1 GPU-hour = 1 SU

- For h100 nodes, 1 GPU-hour = 2 SU

If you use an entire v100 GPU node for one hour, 8 SUs will be deducted from your allocation. The equivalent usage of an entire h100 GPU node for one hour would deduct 16 SUs from your allocation.

- For v100 nodes: 8 GPU units/node x 1 node x 1 hour = 8 gpu-hours = 8 SUs

- For l40s nodes: 8 GPU units/node x 1 node x 1 hour = 8 gpu-hours = 8 SUs

- For h100 nodes: 8 GPU units/node x 1 node x 1 hour = 8 gpu-hours = 16 SUs

If you don’t need all 8 GPUs, you can use just part of a GPU node by submitting to the GPU-shared partition. See more about the partitions on Bridges-2 below.

If you use the GPU-shared partition and use 4 GPU units for 48 hours…

- For v100 nodes: 4 GPU units x 48 hours = 192 gpu-hours = 192 SUs deducted from your allocation

- For l40s nodes: 4 GPU units x 48 hours = 192 gpu-hours = 192 SUs deducted from your allocation

- For h100 nodes: 4 GPU units x 48 hours = 192 gpu-hours = 384 SUs deducted from your allocation

Every Bridges-2 allocation has storage allocation associated with it on the Bridges-2 file system, Ocean. There are no SUs deducted for the space you use, but if you exceed your storage quota, you will not be able to submit jobs to Bridges-2.

Each allocation has a Unix group associated with it. Every file is “owned” by a Unix group, and that file ownership determines which allocation is charged for the file space. See “Managing multiple allocations” for a further explanation of Unix groups, and how to manage file ownership if you have more than one allocation.

You can check your Ocean usage with the projects command.

If you have multiple allocations on Bridges-2, you should ensure that the work you do for each allocation is assigned correctly to that allocation. The files created under or associated with that allocation should belong to it, to make them easier to find and use by others on the same allocation.

There are two ids associated with each allocation for these purposes: a SLURM allocation id and a Unix group id. SLURM allocation ids determine which allocation your Bridges-2 (computational) use is deducted from. Unix group ids determine which allocation the storage space for files is deducted from, and who owns and can access files or directories.

For a given allocation, the SLURM allocation id and the Unix group id are identical strings.

One of your allocations has been designated as your default allocation, and the allocation id and Unix group id associated with that allocation are your default allocation id and default Unix group id. When a Bridges-2 job runs, any SUs it uses are deducted from the allocation it runs under. Any files created by that job are owned by the Unix group associated with that allocation.

Find your default allocation id and Unix group

To find your SLURM allocation ids, use the projects command. It will display all the allocations that you have. It will also list your default SLURM allocation id in the projects output at the top. Your default Unix group id is an identical string. In this example, the user has two allocations with SLURM allocation ids abc000000p and xyz000000p. The default allocation id is abc000000p.

Use a secondary (non-default) allocation

To use an allocation other than your default allocation on Bridges-2, you must specify the appropriate allocation id with the -A option to the SLURM sbatch command. See the Running Jobs section of this Guide for more information on batch jobs, interactive sessions and SLURM. NOTE that using the -A option does not change your default Unix group. Any files created during a job are owned by your default Unix group, no matter which allocation id is used for the job, and the space they use will be deducted from the Ocean allocation for the default Unix group.

Change your Unix group for a login session

To temporarily change your Unix group, use the newgrp command. Any files created subsequently during this login session will be owned by the new group you have specified. Their storage will be deducted from the Ocean allocation of the new group. After logging out of the session, your default Unix group will be in effect again.

newgrp unix-group

NOTE that the newgrp command has no effect on the allocation id in effect. Any Bridges-2 usage will be deducted from the default allocation id or the one specified with the -A option to sbatch.

Change your default allocation id and Unix group permanently

You can permanently change your default allocation id and your default Unix group id with the change_primary_group command. Type:

change_primary_group -l

to see all your groups. Then type

change_primary_group account-id

to set account-id as your default.

Your default allocation id changes immediately. Bridges-2 use by any batch jobs or interactive sessions following this command are deducted from the new account by default.

Your default Unix group does not change immediately. It takes about an hour for the change to take effect. You must log out and log back in after that window for the new Unix group to be the default.

Tracking your usage

There are several ways to track your Bridges-2 usage: the projects command and the Grant Management System.

The projects command shows information on all Bridges-2 allocations, including usage and the Ocean directories associated with the allocation.

For more detailed accounting data you can use the Grant Management System. You can also track your usage through the ACCESS Allocations Portal. Be aware that the Grant Management System may not reflect the status of an ACCESS project renewal request.

Managing your ACCESS allocation

Most account management functions for your ACCESS allocation are handled through the ACCESS Allocations Portal. See the Manage Allocations tab for your usage. Be sure to check the RAMPS/Policies FAQ page for answers for many common questions.

The change_shell command allows you to change your default shell. This command is only available on the login nodes.

To see which shells are available, type

change_shell -l

To change your default shell, type

change_shell newshell

where newshell is one of the choices output by the change_shell -l command. You must log out and back in again for the new shell to take effect.

The policies documented here are evaluated regularly to assure adequate and responsible administration of PSC systems for users. As such, they are subject to change at any time.

PSC provides storage resources, for long-term storage and file management.

Files in a PSC storage system are retained for 3 months after the affiliated allocation has expired.

When appropriate, PSC provides refunds for jobs that failed due to circumstances beyond your control.

To request a refund, contact a PSC consultant or email help@psc.edu. In the case of batch jobs, we require the standard error and output files produced by the job. These contain information needed in order to refund the job.

There are several distinct file spaces available on Bridges-2, each serving a different function.

- $HOME, your home directory on Bridges-2

- $PROJECT, persistent file storage on Ocean. $PROJECT is a larger space than $HOME.

- $LOCAL, Scratch storage on local disk on the node running a job

- $RAMDISK, Scratch storage in the local memory associated with a running job

See PSC polices for user accounts for information about file expiration for allocations using Bridges-2.

Access to files in any Bridges-2 space is governed by Unix file permissions. If your data has additional security or compliance requirements, please contact compliance@psc.edu.

Unix file permissions

For detailed information on Unix file protections, see the man page for the chmod (change mode) command.

To share files with your group, give the group read and execute access for each directory from your top-level directory down to the directory that contains the files you want to share.

chmod g+rx directory-name

Then give the group read and execute access to each file you want to share.

chmod g+rx filename

To give the group the ability to edit or change a file, add write access to the group:

chmod g+rwx filename

Access Control Lists

If you want more fine-grained control than Unix file permissions allow —for example, if you want to give only certain members of a group access to a file, but not all members—then you need to use Access Control Lists (ACLs). Suppose, for example, that you want to give janeuser read access to a file in a directory, but no one else in the group.

Use the setfacl (set file acl) command to give janeuser read and execute access on the directory:

setfacl -m user:janeuser:rx directory-name

for each directory from your top-level directory down to the directory that contains the file you want to share with janeuser. Then give janeuser access to a specific file with

setfacl -m user:janeuser:r filename

User janeuser will now be able to read this file, but no one else in the group will have access to it.

To see what ACLs are set on a file, use the getfacl (get file acl) command.

There are man pages for chmod, setfacl and getfacl.

$HOME

This is your Bridges-2 home directory. It is the usual location for your batch scripts, source code and parameter files. Its path is /jet/home/username, where username is your PSC username. You can refer to your home directory with the environment variable $HOME. Your home directory is visible to all of Bridges-2’s nodes.

Your home directory is backed up daily, although it is still a good idea to store copies of your important files in another location, such as the Ocean file system or on a local file system at your site. If you need to recover a home directory file from backup send email to help@psc.edu. The process of recovery will take 3 to 4 days.

$HOME quota

Your home directory has a 25GB quota. You can check your home directory usage using the my_quotas command. To improve the access speed to your home directory files you should stay as far below your home directory quota as you can.

File expiration

See PSC polices for user accounts for information about file expiration for allocations using Bridges-2.

$PROJECT

$PROJECT is persistent file storage. It is larger than your space in $HOME. Be aware that $PROJECT is NOT backed up.

The path of your Ocean home directory is /ocean/projects/groupname/PSC-username, where groupname is the Unix group id associated with your allocation and PSC–username is your PSC username. Use the id command to find your group name.

The command id -Gn will list all the Unix groups you belong to.

The command id -gn will list the Unix group associated with your current session.

If you have more than one allocation, you will have a $PROJECT directory for each allocation. Be sure to use the appropriate directory when working with multiple allocations.

File expiration

See PSC polices for user accounts for information about file expiration for allocations using Bridges-2.

$PROJECT quota

Storage quota

Your usage quota for each of your allocations is the amount of Ocean storage you received when your proposal was approved. If your total use in Ocean exceeds this quota you won’t be able to run jobs on Bridges-2 until you are under quota again.

Use the my_quotas or projects command to check your Ocean usage.

If you have multiple allocations, it is very important that you store your files in the correct $PROJECT directory.

Inode quota

In order to best serve all Bridges-2 users, an inode quota has been established for $PROJECT. It will be enforced in addition to the storage quota for your allocation. The inode quota is proportional to the size of your storage quota, and is set at 6070 inodes per GB of storage allocated. There is currently no inode quota on home directories in the Jet file system.

Inodes are data structures that contain metadata about a file, such as the file size, user and group ids associated with the file, permission settings, time stamps, and more. Each file has at least one inode associated with it.

To view your usage on Bridges-2, use the my_quotas command which shows your limits as well as your current usage.

[user@bridges2-login013 ~]$ my_quotas The quota for project directory /ocean/projects/abcd1234 Storage quota: 9.766T Storage used: 1.384T Inode quota: 60,700,000 Inodes used: 453,596

Tips to reduce your inode usage:

- Delete files which are no longer needed

- Combine small files into one larger file via tools such as zip or tar

Should you need to increase your storage quota or inode limit, please submit a supplement request via the ACCESS allocation system. If you have questions, please email help@psc.edu.

$LOCAL

Each of Bridges-2’s nodes has a local file system attached to it. This local file system is only visible to the node to which it is attached, and provides fast access to local storage.

In a running job, this file space is available as $LOCAL.

If your application performs a lot of small reads and writes, then you could benefit from using this space.

Node-local storage is only available when your job is running, and can only be used as working space for a running job. Once your job finishes, any files written to $LOCAL are inaccessible and deleted. To use local space, copy files to it at the beginning of your job and back out to a persistent file space before your job ends.

If a node crashes all the node-local files are lost. You should checkpoint theses files by copying them to Ocean during long runs.

$LOCAL size

The maximum amount of local space varies by node type.

To check on your local file space usage type:

du -sh

No Service Units accrue for the use of $LOCAL.

Using $LOCAL

To use $LOCAL you must first copy your files to $LOCAL at the beginning of your script, before your executable runs. The following script is an example of how to do this

RC=1

n=0

while [[ $RC -ne 0 && $n -lt 20 ]]; do

rsync -aP $sourcedir $LOCAL/

RC=$?

let n = n + 1

sleep 10

done

Set $sourcedir to point to the directory that contains the files to be copied before you call your executable. This code will try at most 20 times to copy your files. If it succeeds, the loop will exit. If an invocation of rsync was unsuccessful, the loop will try again and pick up where it left off.

At the end of your job you must copy your results back from $LOCAL or they will be lost. The following script will do this.

mkdir $PROJECT/results

RC=1

n=0

while [[ $RC -ne 0 && $n -lt 20 ]]; do

rsync -aP $LOCAL/ $PROJECT/results

RC=$?

let n = n + 1

sleep 10

done

This code fragment copies your files to a directory in your Ocean file space named results, which you must have created previously with the mkdir command. It will loop at most 20 times and stop if it is successful.

$RAMDISK

You can use the memory allocated for your job for IO rather than using disk space. In a running job, the environment variable $RAMDISK will refer to the memory associated with the nodes in use.

The amount of memory space available to you depends on the size of the memory on the nodes and the number of nodes you are using. You can only perform IO to the memory of nodes assigned to your job.

If you do not use all of the cores on a node, you are allocated memory in proportion to the number of cores you are using. Note that you cannot use 100% of a node’s memory for IO; some is needed for program and data usage.

This space is only available to you while your job is running, and can only be used as working space for a running job. Once your job ends this space is inaccessible and files there are deleted. To use $RAMDISK, copy files to it at the beginning of your job and back out to a permanent space before your job ends. If your job terminates abnormally, files in $RAMDISK are lost.

Within your job you can cd to $RAMDISK, copy files to and from it, and use it to open files. Use the command du -sh to see how much space you are using.

If you are running a multi-node job the $RAMDISK variable points to the memory space on the node that is running your rank 0 process.

Several methods are available to transfer files into and from Bridges-2.

Note: File transfers can no longer be initiated from the Bridges-2 login nodes.

- File transfers should use the Data Transfer Nodes (DTN): data.bridges2.psc.edu

- The DTNs are specifically built to be high-speed data connectors.

- All file transfers must be initiated from your local machine using the DTN nodes.

- Using the DTNs prevents file transfers from disrupting interactive use on Bridges-2’s login nodes.

Paths for Bridges-2 file spaces

To copy files into any of your Bridges-2 spaces, you need to know the path to that space on Bridges-2. The start of the full paths for your Bridges-2 directories are:

Home directory /jet/home/PSC–username

Ocean directory /ocean/projects/groupname/PSC-username

where PSC-username is your PSC username and groupname is the Unix group id associated with your allocation. To find your groupname, use the command id -Gn. All of your valid groupnames will be listed. You have an Ocean directory for each allocation you have.

Transfers into your Bridges-2 home directory

Your home directory quota is 25GB. More space is available in your $PROJECT file space in Ocean. Exceeding your home directory quota will prevent you from writing more data into your home directory and will adversely impact other operations you might want to perform.

Commands to transfer files

You can use rsync, scp, sftp or Globus to copy files to and from Bridges-2.

rsync

You can use the rsync command to copy files to and from Bridges-2. Always use rsync from your local machine, whether you are copying files to Bridges-2 from your local machine, or copying files to your local machine from Bridges-2.

A sample rsync command to copy a file from your local machine to a Bridges-2 directory is

rsync -rltpDvp -e 'ssh -l PSC-username' source_directory data.bridges2.psc.edu:target_directory

A sample rsync command to copy a file from Bridges-2 to your local machine is

rsync -rltpDvp -e 'ssh -l PSC-username' data.bridges2.psc.edu:source_directory target_directory

Notes:

- In both cases, substitute your username for ‘username‘.

- Make sure you use the correct groupname in your target directory.

- If you are using HPN-SSH as your client, substitute “hpnssh” instead of “ssh” in those commands.

- By default, rsync will not copy older files with the same name in place of newer files in the target directory — It will overwrite older files.

We recommend the rsync options -rltDvp. See the rsync man page for information on these options and other options you might want to use. We also recommend the option

-oMACS=umac-64@openssh.com

If you use this option, your transfer will use a faster data validation algorithm.

You may want to put your rsync command in a loop to insure that it completes. A sample loop is

RC=1 n=0 while [[ $RC -ne 0 && $n -lt 20 ]] do rsync source-file target-file RC = $? let n = n + 1 sleep 10 done

This loop will try your rsync command 20 times. If it succeeds it will exit. If an rsync invocation is unsuccessful the system will try again and pick up where it left off. It will copy only those files that have not already been transferred. You can put this loop, with your rsync command, into a batch script and run it with sbatch.

scp

To use scp for a file transfer you must specify a source and destination for your transfer. The format for either source or destination is

username@machine-name:path/filename

For transfers involving Bridges-2, username is your PSC username. Use data.bridges2.psc.edu for the machine-name. This is the name for the Data Transfer Node, a high-speed data connector at PSC. We recommend using it for all file transfers using scp involving Bridges-2. Using it prevents file transfers from disrupting interactive use on Bridges-2’s login nodes.

File transfers using scp must specify full paths for Bridges-2 file systems. See Paths for Bridges-2 file spaces for details.

sftp

To use sftp, first connect to the remote machine:

sftp username@machine-name

When Bridges-2 is the remote machine, use your PSC username as username. The Bridges-2 machine-name should be specified as data.bridges2.psc.edu. This is the name for the Data Transfer Nodes (DTN), a high-speed data connector at PSC. We recommend using it for all file transfers using sftp involving Bridges-2. Using it prevents file transfers from disrupting interactive use on Bridges-2’s login nodes.

You will be prompted for your password on the remote machine. If Bridges-2 is the remote machine, enter your PSC password.

You can then enter sftp subcommands, like put to copy a file from the local system to the remote system, or get to copy a file from the remote system to the local system.

To copy files into Bridges-2, you must either cd to the proper directory or use full pathnames in your file transfer commands. See Paths for Bridges-2 file spaces for details.

Globus

Globus can be used for any file transfer to Bridges-2. It tracks the progress of the transfer and retries when there is a failure; this makes it especially useful for transfers involving large files or many files.

To use Globus to transfer files you must authenticate either via a Globus account or with InCommon credentials.

To use a Globus account for file transfer, set up a Globus account at the Globus site.

To use InCommon credentials to transfer files to/from Bridges-2, you must first provide your ePPN information to PSC. Follow these steps:

- Find your ePPN

- Navigate to https://cilogon.org/ in your web browser.

- Select your institution from the Select an Identity Provider list.

- Click the Log On button. You will be taken to the web login page for your institution.

- Login with your username and password for your institution.

- If your institution has an additional login requirement (e.g., Duo), authenticate that as well.

- After successfully authenticating to your institution’s web login interface, you will be returned to the CILogon webpage.

- Click the User Attributes drop down link to find the ‘ePPN’.

- Send your ePPN to PSC

- From the User Attributes dropdown on the CILogon webpage, select and copy the ePPN text field, typically formated like an e-mail address, with an account name @ some domain. If your CILogon User Attributes ePPN is blank, please let us know.

- Email help@psc.edu, pasting your copied ePPN into the message. Ask that the ePPN be mapped to your PSC username for GridFTP data transfers.

Your CILogon information will be added within one business day, and you will be able to begin transferring files to and from Bridges-2.

Globus endpoints

Once you have the proper authentication you can initiate file transfers from the Globus site. A Globus transfer requires a Globus endpoint, a file path and a file name for both the source and destination.

When using Globus GridFTP for data transfers to/from Bridges-2, please select the endpoint labelled: “PSC Bridges-2 /ocean and /jet filesystems”.

These endpoints are owned by psc@globusid.org. You must always specify a full path for the Bridges-2 file systems. See Paths for Bridges-2 file spaces for details.

You can transfer files from a Bridges-2 allocation that is expiring to a new allocation by moving files to a directory belonging to the new allocation and changing the file ownership.

Move the files to a new directory

Use the mv, rsync, or scp commands to move files from one directory to another.

To move a file from a directory test in the $PROJECT directory of your expiring allocation to directory previous-results of of your $PROJECT space under your new allocation, type:

mv /ocean/projects/old-groupid/PSC-username/test/file1 /ocean/projects/new-groupid/PSC-username/previous-results/file1

If you are in the test directory of the expiring allocation, the command may be simplified to

mv file1 /ocean/projects/new-groupid/PSC-username/previous-results/file1

Note that this will remove the file from your expiring allocation’s file space, rather than make a copy.

See the Transferring Files section of this User Guide for information on the rsync and scp commands.

Change the file ownership

You must also change the Unix group of any files moved into a different allocation’s file space in order to access them under the new allocation. Use the chgrp command to do this. Type:

chgrp new-group filename

To change the group ownership of an entire directory, type:

chgrp -R new-group directory-name

See the Managing Multiple Allocations section of this User Guide for an explanation of allocation ids and Unix groups and how to find them.

Bridges-2 provides a rich programming environment for the development of applications.

C, C++ and Fortran

AMD (AOCC), Intel, Gnu and NVIDIA HPC compilers for C, C++ and Fortan are available on Bridges-2. Be sure to load the module for the compiler set that you want to use. Once the module is loaded, you will have access to the compiler commands:

| Compiler command for | ||||

|---|---|---|---|---|

| Module name | C | C++ | Fortran | |

| AMD | aocc | clang | clang++ | flang |

| Intel | intel | icc | icpc | ifort |

| Intel (LLVM) | intel-oneapi | icx | icpx | ifx |

| Gnu | gcc | gcc | g++ | gfortran |

| NVIDIA | nvhpc | nvcc | nvc++ | nvfortran |

Compiler options

AMD provides a Compiler Options Quick Reference Guide for AMD, Gnu and Intel compilers on their EPYC processors.

There are man pages for each of the compilers.

See also:

- AMD Optimizing C/C++ Compiler (AOCC)

- NVIDIA compilers web site

- GNU compilers web site

- Module documentation for information on what modules are available and how to use them.

OpenMP programming

To compile OpenMP programs you must add an option to your compile command:

| Compiler | Option |

|---|---|

| Intel | -qopenmp for example: icc -qopenmp yprog.c |

| Intel (LLVM) | -fopenmp OR -qopenmp for example: icx -fopenmp yprog.c Check ifx -help or icx -help for more details |

| Gnu | -fopenmp for example: gcc -fopenmp myprog.c |

| NVIDIA | -mp for example: nvcc -mp myprog.c |

See also:

MPI programming

Three types of MPI are supported on Bridges-2: MVAPICH2, OpenMPI and Intel MPI. The three MPI types may perform differently on different problems or in different programming environments. If you are having trouble with one type of MPI, please try using another type. Contact help@psc.edu for more help.

To compile an MPI program, you must:

- load the module for the compiler that you want

- load the module for the MPI type you want to use – be sure to choose one that uses the compiler that you are using. The module name will distinguish between compilers.

- issue the appropriate MPI wrapper command to compile your program

To run your previously compiled MPI program, you must load the same MPI module that was used in compiling.

To see what MPI versions are available, type module avail mpi or module avail mvapich2. Note that the module names include the MPI family and version (“openmpi/4.0.2”), followed by the associated compiler and version (“intel20.4”). (Modules for other software installed with MPI are also shown.)

Wrapper commands

| To use the Intel compilers with | Load an intel module plus | Compile with this wrapper command | ||

|---|---|---|---|---|

| C | C++ | Fortran | ||

| Intel MPI | intelmpi/version-intelversion | mpiicc

note the “ii” |

mpiicpc

note the “ii” |

mpiifort

note the “ii” |

| Intel MPI (LLVM) | intel-oneapi/version-intelversion

Note: Loading intel-oneapi will also load all the dependencies. |

mpiicx

note the “ii” |

mpiicpx

note the “ii” |

mpiifx OR

mpiifort -fc=ifx note the “ii” |

| OpenMPI | openmpi/version-intelversion | mpicc | mpicxx | mpifort |

| MVAPICH2 | mvapich2/version-intelversion | mpicc code.c -lifcore | mpicxx code.cpp -lifcore | mpifort code.f90 -lifcore |

| To use the Gnu compilers with | Load a gcc module plus | Compile with this command | ||

|---|---|---|---|---|

| C | C++ | Fortran | ||

| OpenMPI | openmpi/version-gccversion | mpicc | mpicxx | mpifort |

| MVAPICH2 | mvapich2/version-gccversion | mpicc | mpicxx | mpifort |

| To use the NVIDIA compilers with | Load an nvhpc module plus | Compile with this command | ||

|---|---|---|---|---|

| C | C++ | Fortran | ||

| OpenMPI | openmpi/version-nvhpcversion | mpicc | mpicxx | mpifort |

| MVAPICH2 | Not available | |||

Custom task placement with Intel MPI

If you wish to specify custom task placement with Intel MPI (this is not recommended), you must set the environment variable I_MPI_JOB_RESPECT_PROCESS_PLACEMENT to 0. Otherwise the mpirun task placement settings you give will be ignored. The command to do this is:

For the BASH shell:

export I_MPI_JOB_RESPECT_PROCESS_PLACEMENT=0

For the CSH shell:

setenv I_MPI_JOB_RESPECT_PROCESS_PLACEMENT 0

See also:

- Intel MPI web site

- MVAPICH2 web site

- OpenMPI web site

- Module documentation for information on what modules are available and how to use them.

Other languages

Other languages, including Java, Python, R, and MATLAB, are available. See the software page for information.

Debugging and performance analysis

DDT is a debugging tool for C, C++ and Fortran 90 threaded and parallel codes. It is client-server software. Install the client on your local machine and then you can access the GUI on Bridges-2 to debug your code.

See the DDT page for more information.

Collecting performance statistics

In order to collect performance statistics, you must use the -C PERF option to the sbatch command. Note that this can only be done in RM partitions in which jobs do not share a node with other jobs: RM and RM-512 partitions. See the sbatch section of this User Guide for more information on the options available with the sbatch command.

Bridges-2 has a broad collection of applications installed. See the list of software installed on Bridges-2.

Typing bioinformatics on Bridges-2 will list all of the biological science software that is installed .

PSC has built some environments which provide a rich, unified, Anaconda-based environment for AI, Machine Learning, and Big Data applications. Each environment includes several popular AI/ML/BD packages, selected to work together well. See the section on AI software environments in this User Guide for more information.

Additional software may be installed by request. If you feel that you need particular software for your research, please send a request to help@psc.edu.

All production computing must be done on Bridges-2's compute nodes, NOT on Bridges-2's login nodes. The SLURM scheduler (Simple Linux Utility for Resource Management) manages and allocates all of Bridges-2's compute nodes. Several partitions, or job queues, have been set up in SLURM to allocate resources efficiently.

To run a job on Bridges-2, you need to decide how you want to run: interactively, in batch, or through OnDemand; and where to run - that is, which partitions you are allowed to use.

What are the different ways to run a job?

You can run jobs in Bridges-2 in several ways:

- interactive sessions - where you type commands and receive output back to your screen as the commands complete

- batch mode - where you first create a batch (or job) script which contains the commands to be run, then submit the job to be run as soon as resources are available

- through OnDemand - a browser interface that allows you to run interactively, or create, edit and submit batch jobs and also provides a graphical interface to tools like RStudio, Jupyter notebooks, and IJulia, More information about OnDemand is in the OnDemand section of this user guide.

Regardless of which way you choose to run your jobs, you will always need to choose a partition to run them in.

Which partitions can I use?

Different partitions control different types of Bridges-2's resources; they are configured by the type of node they control, along with other job requirements like how many nodes or how much time or memory is needed. Your access to the partitions is based on the resources included in your Bridges-2 allocation: "Bridges-2 Regular Memory", "Bridges-2 Extreme Memory", or “Bridges-2 GPU". Your allocation may include more than one resource; in that case, you will have access to more than one set of partitions.

You can see which of Bridges-2's resources that you have been allocated with the projects command. See section "The projects command" in the Account Administration section of this User Guide for more information.

You can do your production work interactively on Bridges-2, typing commands on the command line, and getting responses back in real time. But you must be allocated the use of one or more Bridges-2's compute nodes by SLURM to work interactively on Bridges-2. You cannot use Bridges-2's login nodes for your work.

You can run an interactive session in any of the RM or GPU partitions. You will need to specify which partition you want, so that the proper resources are allocated for your use.

Note

You cannot run an interactive session in the EM partition.

If all of the resources set aside for interactive use are in use, your request will wait until the resources you need are available. Using a shared partition (RM-shared, GPU-shared) will probably allow your job to start sooner.

To start an interactive session, use the command interact. The format is:

interact -options

The simplest interact command is

interact

This command will start an interactive job using the defaults for interact, which are

Partition: RM-shared

Cores: 1

Time limit: 60 minutes

If you want to run in a different partition, use more than one core, multiple nodes, or set a different time limit, you will need to use options to the interact command. See the Options for interact section of this User Guide below.

Once the interact command returns with a command prompt you can enter your commands. The shell will be your default shell. When you are finished with your job, type CTRL-D.

[user@bridges2-loginr01 ~]$ interact A command prompt will appear when your session begins "Ctrl+d" or "exit" will end your session [user@r004 ~]

Notes:

- Be sure to use the correct allocation id for your job if you have more than one allocation. See "Managing multiple allocations".

- Service Units (SU) accrue for your resource usage from the time the prompt appears until you type CTRL-D, so be sure to type CTRL-D as soon as you are done.

- The maximum time you can request is 8 hours. Inactive interact jobs are logged out after 30 minutes of idle time.

- By default,

interactuses the RM-shared partition. Use the-poption for interact to use a different partition.

If you want to run in a different partition, use more than one core or set a different time limit, you will need to use options to the interact command. Available options are given below.

| Option | Description | Default value |

|---|---|---|

-p partition |

Partition requested | RM-small |

-t HH:MM:SS |

Walltime requested The maximum time you can request is 8 hours. |

60:00 (1 hour) |

-N n This is only valid for the RM, RM-512 and GPU partitions |

Number of nodes requested | 1 |

--ntasks-per-node=n Note the "--" for this option |

Number of cores to allocate per node | 1 |

-n NTasks

|

Number of tasks spread over all nodes | N/A |

--gres=gpu:type:n Note the "--" for this option |

Specifies the type and number of GPUs requested. Valid choices for 'type' are v100-16, v100-32, l40s-48, and h100-80. See the GPU partitions section of this User Guide for an explanation of the GPU types. Valid choices for 'n' are 1-8 |

N/A |

-A allocation-id |

SLURM allocation id for the job Find or change your default allocation id Note: Files created during a job will be owned by the Unix group in effect when the job is submitted. This may be different than the allocation id for the job. See the discussion of the |

Your default allocation id |

-R reservation-name |

Reservation name, if you have one Use of -R does not automatically set any other interact options. You still need to specify the other options (partition, walltime, number of nodes) to override the defaults for the interact command. If your reservation is not assigned to your default account, then you will need to use the -A option when you issue your interact command. |

N/A |

-h |

Help, lists all the available command options | N/A |

See also

- Bridges-2 partitions

- How to determine your valid SLURM allocation ids and Unix groups and change your default, in the Account Adminstration section of this User Guide

- Managing multiple allocations

- The

sruncommand, for more complex control over your interactive job

Instead of working interactively on Bridges-2, you can instead run in batch. This means you will

- create a file called a batch or job script

- submit that script to a partition (queue) using the

sbatchcommand - wait for the job's turn in the queue

- if you like, check on the job's progress as it waits in the partition and as it is running

- check the output file for results or any errors when it finishes

A simple example

This section outlines an example which submits a simple batch job. More detail on batch scripts, the sbatch command and its options follow.

Create a batch script

Use any editor you like to create your batch scripts. A simple batch script named hello.job which runs a "hello world" command is given here. Comments, which begin with '#', explain what each line does.

The first line of any batch script must indicate the shell to use for your batch job.

#!/bin/bash # use the bash shell set -x # echo each command to standard out before running it date # run the Unix 'date' command echo "Hello world, from Bridges-2!" # run the Unix 'echo' command

Submit the batch script to a partition

Use the sbatch command to submit the hello.job script.

[joeuser@login005 ~]$ sbatch hello.job Submitted batch job 7408623

Note the jobid that is echoed back to you when the job is submitted. Here it is 7408623.

Check on the job progress

You can check on the job's progress in the partition by using the squeue command. By default you will get a list of all running and queued jobs. Use the -u option with your PSC username to see only your jobs. See the squeue command for details.

[joeuser@login005 ~]$ squeue -u joeuser JOBID PARTITION NAME USER ST TIME NODES NODELIST(REASON) 7408623 RM hello.jo joeuser PD 0:08 1 r7320:00

The status "PD" (pending) in the output here shows that job 7408623 is waiting in the queue. See more about the squeue command below.

When the job is done, squeue will no longer show it:

[joeuser@login005 ~]$ squeue -u joeuser JOBID PARTITION NAME USER ST TIME NODES NODELIST(REASON)

Check the output file when the job is done

By default, the standard output and error from a job are saved in a file with the name slurm-jobid.out, in the directory that the job was submitted from.

[joeuser@login005 ~]$ more slurm-7408623.out + date Sun Jan 19 10:27:06 EST 2020 + echo 'Hello world, from Bridges-2!' Hello world, from Bridges-2! [joeuser@login005 ~]$

To submit a batch job, use the sbatch command. The format is

sbatch -options batch-script

The options to sbatch can either be in your batch script or on the sbatch command line. Options in the command line override those in the batch script.

Note:

- Be sure to use the correct allocation id if you have more than one allocation. Please see the -A option for sbatch to change the SLURM allocation id for a job. Information on how to determine your valid allocation ids and change your default allocation id is in the Account adminstration section of this User Guide.

- In some cases, the options for sbatch differ from the options for interact or srun.

- By default, sbatch submits jobs to the RM partition. Use the -p option for sbatch to direct your job to a different partition

For more information about these options and other useful sbatch options see the sbatch man page.

| Option | Description | Default |

|---|---|---|

-p partition |

Partition requested | RM |

-t HH:MM:SS |

Walltime requested in HH:MM:SS | 30 minutes |

-N n |

Number of nodes requested. | 1 |

-n n |

Number of cores requested in total. | None |

--ntasks-per-node=n Note the "--" for this option |

Request n cores be allocated per node. | 1 |

-o filename |

Save standard out and error in filename. This file will be written to the directory that the job was submitted from. | slurm-jobid.out |

--gpus=type:n Note the "--" for this option |

Specifies the number of GPUs requested. 'type' specifies the type of GPU you are requesting. Valid types are v100-16, v100-32, l40s-48, and h100-80. See the GPU partitions section of this User Guide for information on the GPU types. 'n' is the total number of GPUs requested for this job. |

N/A |

-A allocation-id |

SLURM allocation id for the job. If not specified, your default allocation id is used. Find your default SLURM allocation id. Note: Files created during a job will be owned by the Unix group in effect when the job is submitted. This may be different than the allocation id used by the job. See the discussion of the |

Your default allocation id |

-C constraints |

Specifies constraints which the nodes allocated to this job must satisfy. Valid constraints are:

See the discussion of the -C option in the sbatch man page for more information. |

N/A |

--res reservation-name Note the "--" for this option |

Use the reservation that has been set up for you. Use of --res does not automatically set any other options. You still need to specify the other options (partition, walltime, number of nodes) that you would in any sbatch command. If your reservation is not assigned to your default account then you will need to use the -A option to sbatch to specify the account. | N/A |

--mail-type=type Note the "--" for this option |

Send email when job events occur, where type can be BEGIN, END, FAIL or ALL. | N/A |

--mail-user=PSC-username Note the "--" for this option |

User to send email to as specified by -mail-type. Default is the user who submits the job. | N/A |

-d=dependency-list |

Set up dependencies between jobs, where dependency-list can be:

|

N/A |

--no-requeue Note the "--" for this option |

Specifies that your job will be not be requeued under any circumstances. If your job is running on a node that fails it will not be restarted. Note the "--" for this option. | N/A |

--time-min=HH:MM:SS Note the "--" for this option. |

Specifies a minimum walltime for your job in HH:MM:SS format. SLURM considers the walltime requested when deciding which job to start next. Free slots on the machine are defined by the number of nodes and how long those nodes are free until they will be needed by another job. By specifying a minimum walltime you allow the scheduler to reduce your walltime request to your specified minimum time when deciding whether to schedule your job. This could allow your job to start sooner. If you use this option your actual walltime assignment can vary between your minimum time and the time you specified with the -t option. If your job hits its actual walltime limit, it will be killed. When you use this option you should checkpoint your job frequently to save the results obtained to that point. |

N/A |

-h |

Help, lists all the available command options |

See also

- Bridges-2 partitions

- How to determine your valid allocation ids and change your defaults, in the Account administration section of this User Guide

- Managing multiple allocations

Managing multiple allocations

If you have more than one allocation, be sure to use the correct SLURM allocation id and Unix group when running jobs.

See "Managing multiple allocations" in the Account Administration section of this User Guide to see how to find your allocation ids and Unix groups and determine or change your defaults.

Permanently change your default SLURM allocation id and Unix group

See the change_primary_group command in the "Managing multiple allocations" in the Account Administration section of this User Guide to permanently change your default SLURM allocation id and Unix group.

Temporarily change your SLURM allocation id or Unix group

See the -A option to the sbatch or interact commands to set the SLURM allocation id for a specific job.

The newgrp command will change your Unix group for that login session only. Note that any files created by a job are owned by the Unix group in effect when the job is submitted, which is not necessarily the same as the allocation id used for the job. See the newgrp command in the Account Administration section of this User Guide to see how to change the Unix group currently in effect.

Each SLURM partition manages a subset of Bridges-2's resources. Each partition allocates resources to interactive sessions, batch jobs, and OnDemand sessions that request resources from it.

Not all partitions may be open to you. The resources included in your Bridges-2 allocations determine which partitions you can submit jobs to.

An allocation including "Bridges-2 Regular Memory" allows you to use Bridges-2's RM (256 and 512GB) nodes. The RM, RM-shared and RM-512 partitions handle jobs for these nodes.

An allocation including "Bridges-2 Extreme Memory" allows you to use Bridges-2’s 4TB EM nodes. The EM partition handles jobs for these nodes.

An allocation including "Bridges-2 GPU" allows you to use Bridges-2's GPU nodes. The GPU and GPU-shared partitions handle jobs for these nodes.

All the partitions use FIFO scheduling. If the top job in the partition will not fit, SLURM will try to schedule the next job in the partition. The scheduler follows policies to ensure that one user does not dominate the machine. There are also limits to the number of nodes and cores a user can simultaneously use. Scheduling policies are always under review to ensure best turnaround for users.

#!/bin/bash #SBATCH -N 1 #SBATCH -p RM #SBATCH -t 5:00:00 #SBATCH --ntasks-per-node=128 # type 'man sbatch' for more information and options # this job will ask for 1 full RM node (128 cores) for 5 hours # this job would potentially charge 640 RM SUs #echo commands to stdout set -x # move to working directory # this job assumes: # - all input data is stored in this directory # - all output should be stored in this directory # - please note that groupname should be replaced by your groupname # - PSC-username should be replaced by your PSC username # - path-to-directory should be replaced by the path to your directory where the executable is cd /ocean/projects/groupname/PSC-username/path-to-directory # run a pre-compiled program which is already in your project space ./a.out

#!/bin/bash #SBATCH -N 1 #SBATCH -p RM-shared #SBATCH -t 5:00:00 #SBATCH --ntasks-per-node=64 # type 'man sbatch' for more information and options # this job will ask for 64 cores in RM-shared and 5 hours of runtime # this job would potentially charge 320 RM SUs #echo commands to stdout set -x # move to working directory # this job assumes: # - all input data is stored in this directory # - all output should be stored in this directory # - please note that groupname should be replaced by your groupname # - PSC-username should be replaced by your PSC username # - path-to-directory should be replaced by the path to your directory where the executable is cd /ocean/projects/groupname/PSC-username/path-to-directory # run a pre-compiled program which is already in your project space ./a.out

Sample batch script for a job in the RM-512 partition

Sample batch script for a job in the RM-512 partition

#!/bin/bash #SBATCH -N 1 #SBATCH -p RM-512 #SBATCH -t 5:00:00 #SBATCH --ntasks-per-node=128 # type 'man sbatch' for more information and options # this job will ask for 1 full RM 512GB node (128 cores) for 5 hours # this job would potentially charge 640 RM SUs #echo commands to stdout set -x # move to working directory # this job assumes: # - all input data is stored in this directory # - all output should be stored in this directory # - please note that groupname should be replaced by your groupname # - PSC-username should be replaced by your PSC username # - path-to-directory should be replaced by the path to your directory where the executable is cd /ocean/projects/groupname/PSC-username/path-to-directory # run a pre-compiled program which is already in your project space ./a.out

Summary of partitions for Bridges-2 RM nodes

| RM | RM-shared | RM-512 | |

|---|---|---|---|

| Node RAM | 256GB | 256GB | 512GB |

| Node count default | 1 | NA Only one node per job is allowed in the RM-shared partition |

1 |

| Node count max | 64 | NA Only one node per job is allowed in the RM-shared partition |

2 |

| Core count default | 128 | 1 | 128 |

| Core count max | 6400 | 64 | 256 |

| Walltime default | 1 hour | 1 hour | 1 hour |

| Walltime max | 72 hours | 72 hours | 72 hours |

The EM partition should be used for allocations including “Bridges-2 Extreme Memory” .

Use the appropriate allocation id for your jobs: If you have more than one Bridges-2 allocation, be sure to use the correct SLURM allocation id for each job. See “Managing multiple allocations”.

For information on requesting resources and submitting jobs see the discussion of the interact or sbatch commands.

Jobs in the EM partition

- run on Bridges-2’s EM nodes, which have 4TB of memory and 96 cores per node

- can use at most one full EM node

- must specify the number of cores to use

- must use a multiple of 24 cores. A job can request 24, 48, 72 or 96 cores.

When submitting a job to the EM partition, you can request:

- the number of cores

- the walltime limit

Your job will be allocated memory in proportion to the number of cores you request. Be sure to request enough cores to be allocated the memory that your job needs. Memory is allocated at about 1TB per 24 cores. As an example, if your job needs 2TB of memory, you should request 48 cores.

If you do not specify the number of cores or time limit, you will get the defaults. See the summary table for the EM partition below for the defaults.

Note

You cannot submit an interactive job to the EM partition.You cannot use the EM partition through OnDemand.

Sample sbatch command for the EM partition

An example of a sbatch command to submit a job to the EM partition, requesting an entire node for 5 hours is

sbatch -p EM -t 5:00:00 --ntasks-per-node=96 myscript.job

where:

-p indicates the intended partition

-t is the walltime requested in the format HH:MM:SS

--ntasks-per-node is the number of cores requested per node

myscript.job is the name of your batch script

Sample job script for the EM partition

#!/bin/bash #SBATCH -N 1 #SBATCH -p EM #SBATCH -t 5:00:00 #SBATCH -n 96 # type 'man sbatch' for more information and options # this job will ask for 1 full EM node (96 cores) and 5 hours of runtime # this job would potentially charge 480 EM SUs # echo commands to stdout set -x # move to working directory # this job assumes: # - all input data is stored in this directory # - all output should be stored in this directory # - please note that groupname should be replaced by your groupname # - PSC-username should be replaced by your PSC username # - path-to-directory should be replaced by the path to your directory where the executable is cd /ocean/projects/groupname/PSC-username/path-to-directory #run pre-compiled program which is already in your project space ./a.out

Summary of the EM partition

| EM partition | |

|---|---|

| Node | 96 cores/node 4TB/node |

| Node max | 1 |

| Core default | None |

| Core min | 24 |

| Core max | 96 |

| Walltime default | 1 hour |

| Walltime max | 120 hours (5 days) |

| Memory | 1TB per 24 cores |

Jobs in the GPU and GPU-shared partitions run on the GPU nodes and are available for allocations including "Bridges-2 GPU".

For information on requesting resources and submitting jobs see the interact or sbatch commands.

Use the appropriate allocation id for your jobs: If you have more than one Bridges-2 allocation, be sure to use the correct SLURM allocation id for each job. See “Managing multiple allocations”.

Jobs in the GPU partition can use more than one node. Jobs in the GPU partition do not share nodes, so jobs are allocated all the cores and all of the GPUs associated with the nodes assigned to them . Your job will incur SU costs for all of the cores on your assigned nodes. The memory space across nodes is not integrated. The cores within a node access a shared memory space, but cores in different nodes do not.

Jobs in the GPU-shared partition use only part of one node. Because SUs are calculated using how many gpus are used, using only part of a node will result in a smaller SU charge.

GPU types

Bridges-2 has four types of GPU nodes: h100-80, l40s-48, v100-32, and v100-16. The -80, -48, -32, or -16 in each type indicates the amount of GPU memory per GPU on the node. All node types can be used in all GPU partitions.

h100-80 nodes

- There are ten h100-80 nodes containing eight H100 GPUs, each with 80GB of GPU memory. These nodes have 2TB RAM per node.

l40s-48 nodes

- There are three l40s-48 nodes containing eight L40S GPUs, each with 48GB of GPU memory. These nodes have 1TB RAM per node.

v100-32 nodes

- There are 24 Tesla v100-32 nodes. Each has eight V100 GPUs and 32GB of GPU memory per GPU. These nodes have 512GB RAM per node.

- There is one DGX-2 node, with 16 V100 GPUs, each with 32GB of GPU memory. It has 1.5TB RAM.

v100-16 nodes

- There are 9 v100-16 nodes containing eight V100 GPUs, each with 16GB of GPU memory. These nodes have 192GB RAM per node.

The GPU partition

The GPU partition is for jobs that will use one or more entire GPU nodes.

When submitting a job to the GPU partition, you must use these options to specify the number of GPUs you want. Be aware that the way to request a number of GPUs is different, depending on whether you are using an interactive session or a batch job.

If you do not specify the number of GPUs or time limit, you will get the defaults. See the summary table for the GPU partitions below for the defaults.

Interactive sessions

Use a command like

interact -p GPU --gres=gpu:type:n

- In interactive use, n is the number of GPUs you are requesting per node. Because you always use one or more entire nodes in the GPU partition, n must always be either 8 or 16. To use the DGX-2, n must be 16. For all other GPU nodes, n must be 8.

- type is one of h100-80, l40s-48, v100-16, or v100-32

- Because there is only one DGX-2, you cannot request more than one node with -N when using asking for 16 GPUs (i.e., --gres=gpu:v100-32:16).

- Note: Users can no longer use Interact to request more than 1 node.

See interact command options for details on other options, such as the walltime limit.

Sample interact command for the GPU partition

An interact command to start a GPU job on 2 GPU v100-32 nodes for 30 minutes is

interact -p GPU --gres=gpu:v100-32:8 -t 30:00

where:

-p indicates the intended partition

--gres=gpu:v100-32:8 requests the use of 8 GPUs on each v100-32 node

-t 30:00 requests 30 minutes of walltime, in the format HH:MM:SS

Batch jobs

Use a command like

sbatch -p GPU --gpus=type:n -N x jobname

- In batch use, n is the total number of GPUs you are requesting for the job. Because you always use one or more entire nodes in the GPU partition, n must be a multiple of 8, either: 8, 16, 24 or 32, depending on how many nodes you are requesting. To use the DGX-2, use 16 for n and never ask for more than one node.

- type is one of "v100-16" or v100-32"

- x indicates the number of nodes you want to use, from 1-4. If you only want one node, you can omit the -N option because it defaults to one.

- Valid options to use one node are

- --gpus=h100-80:8 to use an H100-80 node

- --gpus=l40s-48:8 to use an L40S-48 node

- --gpus=v100-32:16 to use the DGX-2

- --gpus=v100-32:8, to use a V100-32 Tesla node

- --gpus=v100-16:8, to use a Volta node

- jobname is the name of your job script

See the sbatch command options for more details on available options, such as the walltime limit.

Sample sbatch command for the GPU partition

A sample sbatch command to submit a job to the GPU partition to use 2 full GPU v100-16 nodes and all 8 GPUs on each node for 5 hours is

sbatch -p GPU -N 2 --gpus=v100-16:16 -t 5:00:00 jobname

where:

-p indicates the intended partition

-N 2 requests two v100-16 GPU nodes

--gpus=v100-16:16 requests the use of all 8 GPUs on both v100-16 nodes, for a total of 16 for the job

-t is the walltime requested in the format HH:MM:SS

jobname is the name of your batch script

Sample job script for the GPU partition

#!/bin/bash #SBATCH -N 1 #SBATCH -p GPU #SBATCH -t 5:00:00 #SBATCH --gpus=v100-32:8 #type 'man sbatch' for more information and options #this job will ask for 1 full v100-32 GPU node(8 V100 GPUs) for 5 hours #this job would potentially charge 40 GPU SUs #echo commands to stdout set -x # move to working directory # this job assumes: # - all input data is stored in this directory # - all output should be stored in this directory # - please note that groupname should be replaced by your groupname # - PSC-username should be replaced by your PSC username # - path-to-directory should be replaced by the path to your directory where the executable is cd /ocean/projects/groupname/PSC-username/path-to-directory #run pre-compiled program which is already in your project space ./gpua.out

#!/bin/bash #SBATCH -N 1 #SBATCH -p GPU-shared #SBATCH -t 5:00:00 #SBATCH --gpus=v100-32:4 #type 'man sbatch' for more information and options #this job will ask for 4 V100 GPUs on a v100-32 node in GPU-shared for 5 hours #this job would potentially charge 20 GPU SUs #echo commands to stdout set -x # move to working directory # this job assumes: # - all input data is stored in this directory # - all output should be stored in this directory # - please note that groupname should be replaced by your groupname # - PSC-username should be replaced by your PSC username # - path-to-directory should be replaced by the path to your directory where the executable is cd /ocean/projects/groupname/PSC-username/path-to-directory #run pre-compiled program which is already in your project space ./gpua.out

Summary of partitions for GPU nodes

| GPU | GPU-shared | |

|---|---|---|

| Default number of nodes | 1 | NA |

| Max nodes/job | NA | |

| Default number of GPUs | 8 | 1 |

| Max GPUs/job | 64 | 4 |

| Default runtime | 1 hour | 1 hour |

| Max runtime | 48 hours | 48 hours |

Benchmarking jobs require using one or more entire nodes. Use the RM, RM-512 or GPU partitions to ensure that no other jobs can run on any of the nodes your benchmarking job is using.

Using the DGX-2 for benchmarking

To use the entire DGX-2 node, submit a job to the GPU partition requesting 16 v100-32 GPUs. Use a command like

sbatch -p GPU --gpus=v100-32:16 jobname

Add any other options, like walltime, that you need. See the section of this User Guide on sbatch options for descriptions of other available options.

Using other GPU nodes for benchmarking

To use the entire GPU node, submit a job to the GPU partition requesting 8 GPUs. Use a command like

sbatch -p GPU --gpus=v100-32:8 jobname

or

sbatch -p GPU --gpus=v100-16:8 jobname

depending on the type of GPU node you need.

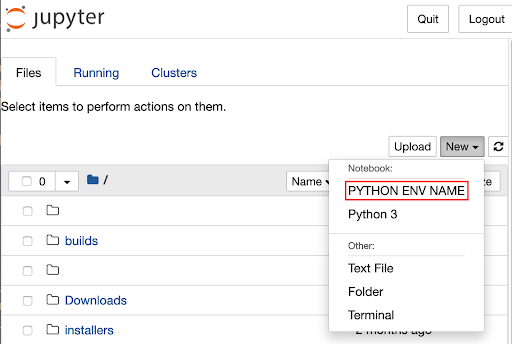

Add any other options, like walltime, that you need. See the section of this User Guide on sbatch options for descriptions of other available options.