COSMO

COSMO: A Research Data Service Platform

COSMO is a platform (web portals, API) for easily sharing large datasets, as well as a set of domain-agnostic recommendations for scientific data sharing that expedite information access without the need to first download the entire dataset.

On this page:

- Best practices recommendations

- Datasets leveraging COSMO

- System design and implementation

- How to share your dataset using COSMO, including limitations, computational requirements, and constraints

- Resources

Best practices recommendations

- Enable the dataset to be browsed

- Allow metadata and the dataset to be downloadable by key segments

- Enable multiple data transfer protocols

- Describe the data fields and data structure

- Support dataset queries via a REST API

Enable the dataset to be browsed

COSMO implements this through a data-sharing portal that presents the dataset's structure at different levels of granularity.

Pros

- Researchers can navigate the dataset files and identify what is relevant to them.

Cons

- The dataset must be organized into a structure that can be reflected in a web portal, so researchers can navigate it intuitively.

- Descriptions for individual sections and files need to be created so researchers can navigate the dataset.
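One way to reflect a dataset's structure in a browsing portal is to precompute a nested index of directories and files, with sizes and descriptions, that the portal can render as a tree. The sketch below assumes the dataset is laid out as ordinary directories on disk; `build_index` and the `descriptions` mapping are hypothetical names, not part of the COSMO code.

```python
from pathlib import Path


def build_index(root, descriptions=None):
    """Return a nested dict describing every directory and file under root.

    `descriptions` is a hypothetical mapping from a relative POSIX path to a
    human-written description; entries without one get an empty string.
    """
    root = Path(root)
    descriptions = descriptions or {}

    def walk(path):
        rel = path.relative_to(root).as_posix()
        node = {
            "name": path.name,
            "path": rel,
            "description": descriptions.get(rel, ""),
        }
        if path.is_dir():
            # Sorted so the portal shows a stable, predictable order.
            children = [walk(child) for child in sorted(path.iterdir())]
            node["type"] = "directory"
            node["children"] = children
            # A directory's size is the sum of everything beneath it.
            node["size_bytes"] = sum(c["size_bytes"] for c in children)
        else:
            node["type"] = "file"
            node["size_bytes"] = path.stat().st_size
        return node

    return walk(root)
```

Serializing the result with `json.dumps(build_index("/path/to/dataset"), indent=2)` (the path is a placeholder) gives a document a front end could render as an expandable tree, with the descriptions written once and shown next to each section and file.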

Allow metadata and the dataset to be downloadable by key segments

Allow the data index metadata (megabytes) to be downloaded first, so researchers can identify sections of interest before downloading the main content (gigabytes, terabytes, or petabytes).

Pros

- Researchers can download parts of the information to quickly determine if it is relevant for their own research.

- Researchers can start separate transfers for subsets of the files, which can be resumed if interrupted, so each batch of information becomes available sooner than it would from a single huge file.
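To resume an interrupted direct (HTTP) download, the client only needs to ask the server for the bytes that are still missing. The following is a minimal sketch using Python's standard library. It assumes the server supports HTTP range requests, and the function names are illustrative. Globus transfers provide their own resume support, so this applies only to direct downloads.

```python
import os
import shutil
import urllib.request


def range_header_for(dest_path):
    """Return the HTTP Range header needed to resume a partial download."""
    if os.path.exists(dest_path):
        already = os.path.getsize(dest_path)
        if already > 0:
            # Ask for everything from the first missing byte to the end.
            return {"Range": f"bytes={already}-"}
    return {}


def download_resumable(url, dest_path, chunk_size=1 << 20):
    """Download url to dest_path, continuing from any partial file on disk."""
    request = urllib.request.Request(url, headers=range_header_for(dest_path))
    with urllib.request.urlopen(request) as response:
        # 206 Partial Content means the server honored the Range request;
        # anything else (typically 200) means it sent the whole file again.
        mode = "ab" if response.status == 206 else "wb"
        with open(dest_path, mode) as out:
            shutil.copyfileobj(response, out, chunk_size)
```

If the local file is already complete, many servers answer with 416 (Range Not Satisfiable), which `urlopen` raises as `urllib.error.HTTPError`. A caller can treat that as "nothing left to fetch."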

Cons

- The dataset must be structured so that information is usable when downloaded in increments. For example, metadata should be downloadable separately for each iteration, so researchers can examine it before deciding whether to download the rest. The same principle can be applied to split the dataset into smaller chunks for efficient downloading.

- Each section needs a clear, stable description so researchers download the right files.
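A small metadata index lets researchers decide what to fetch before they commit to a large download. The sketch below assumes the index lists each file's path, iteration, data kind, and size. The `IndexEntry` fields and the `select_files` helper are hypothetical and do not describe a COSMO file format. The example kinds reuse the BlueTides catalog names only as labels.

```python
from dataclasses import dataclass


@dataclass
class IndexEntry:
    """One row of a hypothetical metadata index shipped ahead of the data."""
    path: str
    iteration: int
    kind: str        # illustrative labels, e.g. "snapshot", "fof", "pig"
    size_bytes: int


def select_files(index, kinds, iterations):
    """Pick the entries a researcher wants and their total download size."""
    chosen = [e for e in index if e.kind in kinds and e.iteration in iterations]
    return chosen, sum(e.size_bytes for e in chosen)
```

For example, selecting only one kind of catalog for one iteration returns those entries together with the exact number of bytes to transfer. Researchers then know the download size before starting it.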

Enable multiple data transfer protocols

Offer several ways to transfer data. For example, use anonymous Globus endpoints to support direct (HTTP) downloads, and use regular Globus collections to allow automated transfers for anyone using Globus Connect Personal.

Pros

- Researchers accessing the data have more options to download the data based on their specific environment and resources.

Cons

- Multiple services must be installed, configured, and maintained to keep the full offering up and running.
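A portal that offers several protocols can generate every access option for a file from a single relative path. In the sketch below, the HTTPS base URL and the Globus collection UUID are placeholders for a deployment's own values. The Globus web app `file-manager` link format (`origin_id`, `origin_path`) is an assumption and should be checked against current Globus documentation.

```python
import posixpath
from urllib.parse import quote, urlencode


def access_options(rel_path, https_base, collection_id, collection_root="/"):
    """Return direct-download and Globus links for one dataset file."""
    rel = rel_path.lstrip("/")
    # The Globus file manager opens a directory, so point it at the file's parent.
    globus_dir = posixpath.dirname(posixpath.join(collection_root, rel))
    if not globus_dir.endswith("/"):
        globus_dir += "/"
    return {
        # Direct HTTPS download, e.g. through an anonymous Globus endpoint.
        "https": https_base.rstrip("/") + "/" + quote(rel),
        # Globus web app link from which a managed transfer can be started.
        "globus": "https://app.globus.org/file-manager?"
        + urlencode({"origin_id": collection_id, "origin_path": globus_dir}),
    }
```

With this approach, each new protocol adds one more entry to the returned dictionary. It does not require another copy of the file listing.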

Describe the data fields and data structure

Sharing a description of the data structures and the relationships between data fields equips users to access the data efficiently.

Pros

- Users better understand the data fields and the relationships between them.

- Researchers will need less hands-on support for using datasets.

- Thorough documentation eases knowledge transfer when team members leave a project.

Cons

- It is time-consuming to write a thorough description of every field in a dataset.

- Any change to the dataset must be accompanied by an update to the documentation of every modified field.
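Storing field documentation in a machine-readable form makes this maintenance cost easier to manage. A script can flag fields that were added without a description, and descriptions left behind after a field was removed. The following is a minimal sketch. The field names in the example are illustrative, and how the list of fields is extracted from the data depends on the file format.

```python
def documentation_gaps(dataset_fields, field_docs):
    """Compare the fields present in the data with the documented ones.

    dataset_fields: field names found in the data (e.g. read from headers).
    field_docs: mapping of field name to its written description.
    Returns (undocumented, stale): fields lacking a non-empty description,
    and descriptions for fields that no longer exist in the data.
    """
    present = set(dataset_fields)
    documented = {name for name, text in field_docs.items() if text.strip()}
    undocumented = sorted(present - documented)
    stale = sorted(set(field_docs) - present)
    return undocumented, stale
```

If this check runs whenever the dataset changes (for example, in continuous integration), updating the documentation becomes a visible step instead of one that is easy to forget.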

Support dataset queries via a REST API

This approach allows researchers to browse and interact with the data via a commonly used programmatic protocol. COSMO includes data endpoints, instructions, tutorials, and a website that allows users to directly query the API from the browser.

Pros

- Researchers do not need to download the dataset to start inspecting it and obtaining preliminary results.

- The REST API is programming-language-agnostic so researchers can interact with the information and produce results using the tools they are already comfortable with.

- Researchers need no local storage for transferring and hosting the dataset, because the REST API accesses the data where it is stored and returns only the results produced.

Cons

- It takes time to design, implement, and deploy the REST API.

- Depending on the dataset, performance tuning and/or parallelization techniques may be needed to keep response times short.
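The API communicates over plain HTTP and JSON, so a client needs nothing beyond a language's standard library. The sketch below uses only Python's standard library. The `/halos` endpoint and the `min_mass` and `limit` parameters in the usage example are hypothetical, so consult the dataset's API reference for the real endpoints.

```python
import json
import urllib.request
from urllib.parse import urlencode


def build_query_url(base_url, endpoint, **params):
    """Join a base URL, an endpoint path, and non-empty query parameters."""
    query = urlencode({k: v for k, v in params.items() if v is not None})
    url = base_url.rstrip("/") + "/" + endpoint.lstrip("/")
    return f"{url}?{query}" if query else url


def fetch_json(url, timeout=60):
    """GET a URL and decode its JSON body."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return json.load(response)
```

For example, `fetch_json(build_query_url("https://api.example.org", "/halos", min_mass=1e10, limit=5))` would return only the matching records. The full dataset would never be transferred.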

Datasets leveraging COSMO

These datasets currently use COSMO or are being configured to do so. Before-and-after screenshots show the impact of COSMO.

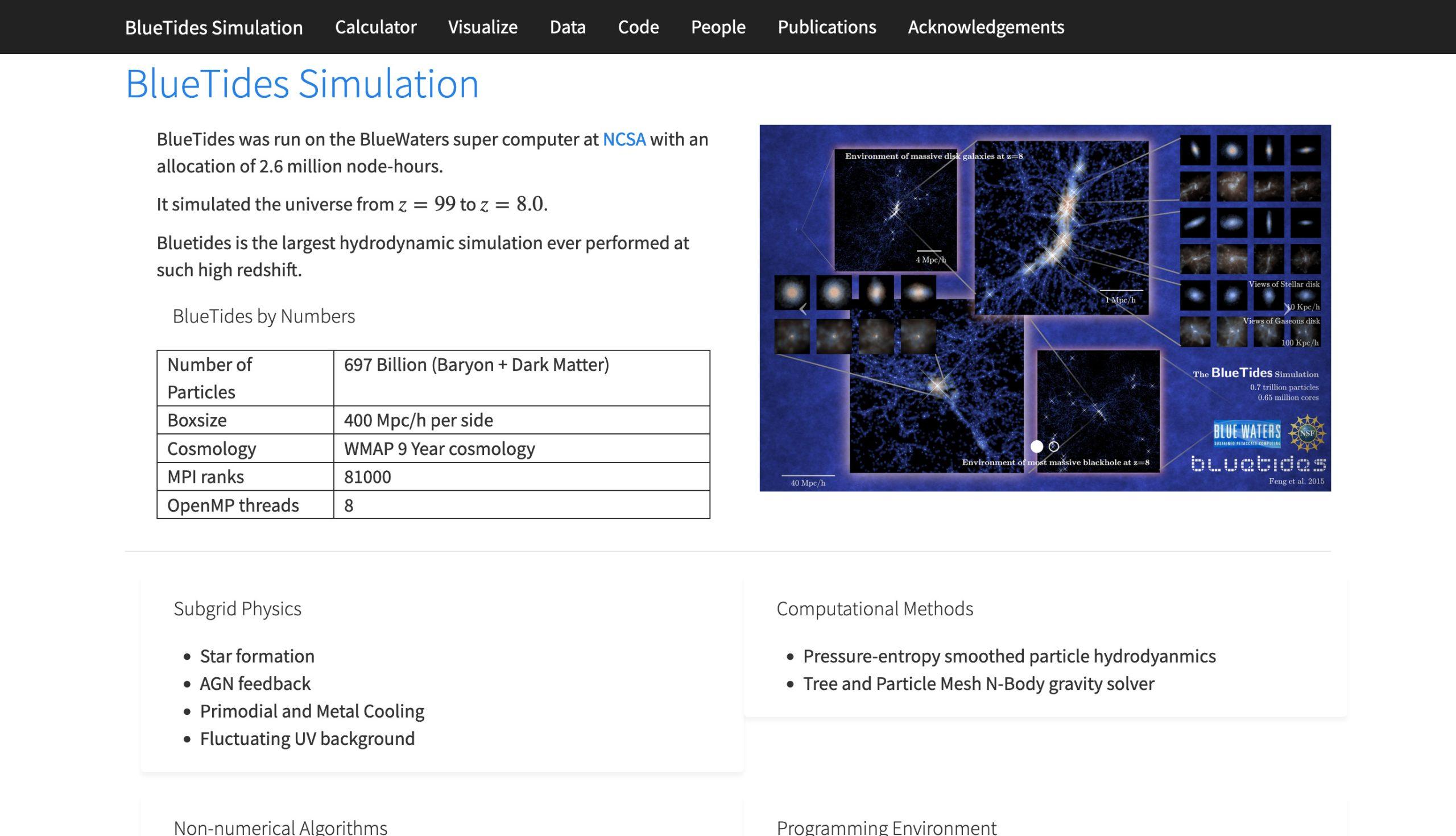

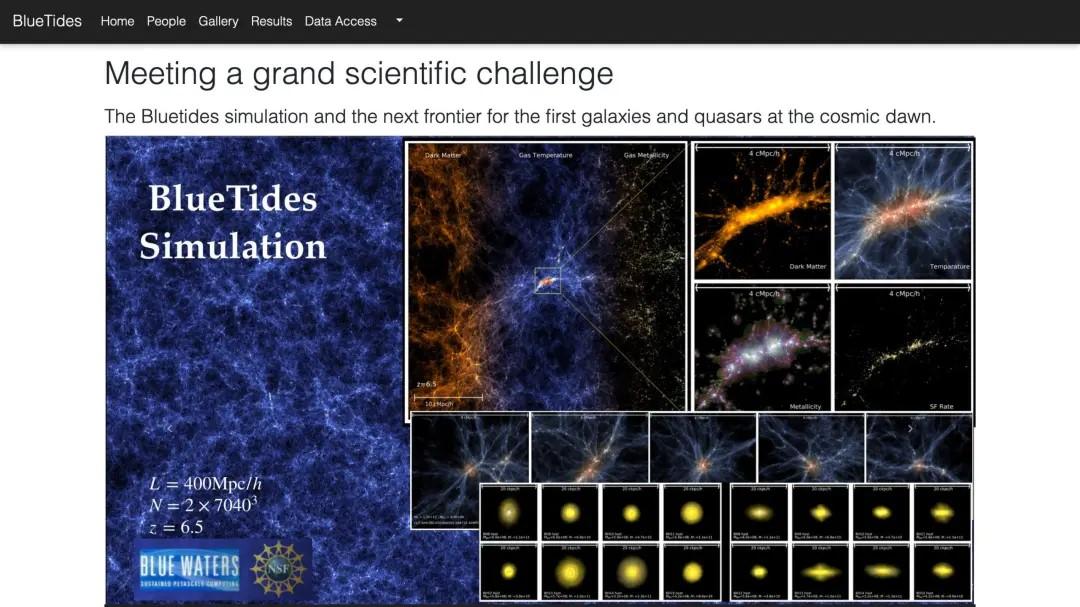

BlueTides

The BlueTides simulation project aims to understand how supermassive black holes and galaxies formed in the first billion years of the universe’s history. It uses one of the largest cosmological hydrodynamic simulations ever performed, with a box 400 cMpc/h on a side and a total of 0.7 trillion simulated particles.

BlueTides home page: https://bluetides.psc.edu/

Source code: https://github.com/pscedu/cosmo/tree/main/bluetides-landing-description-portal

Visual comparison before and after adopting COSMO:

Before

After

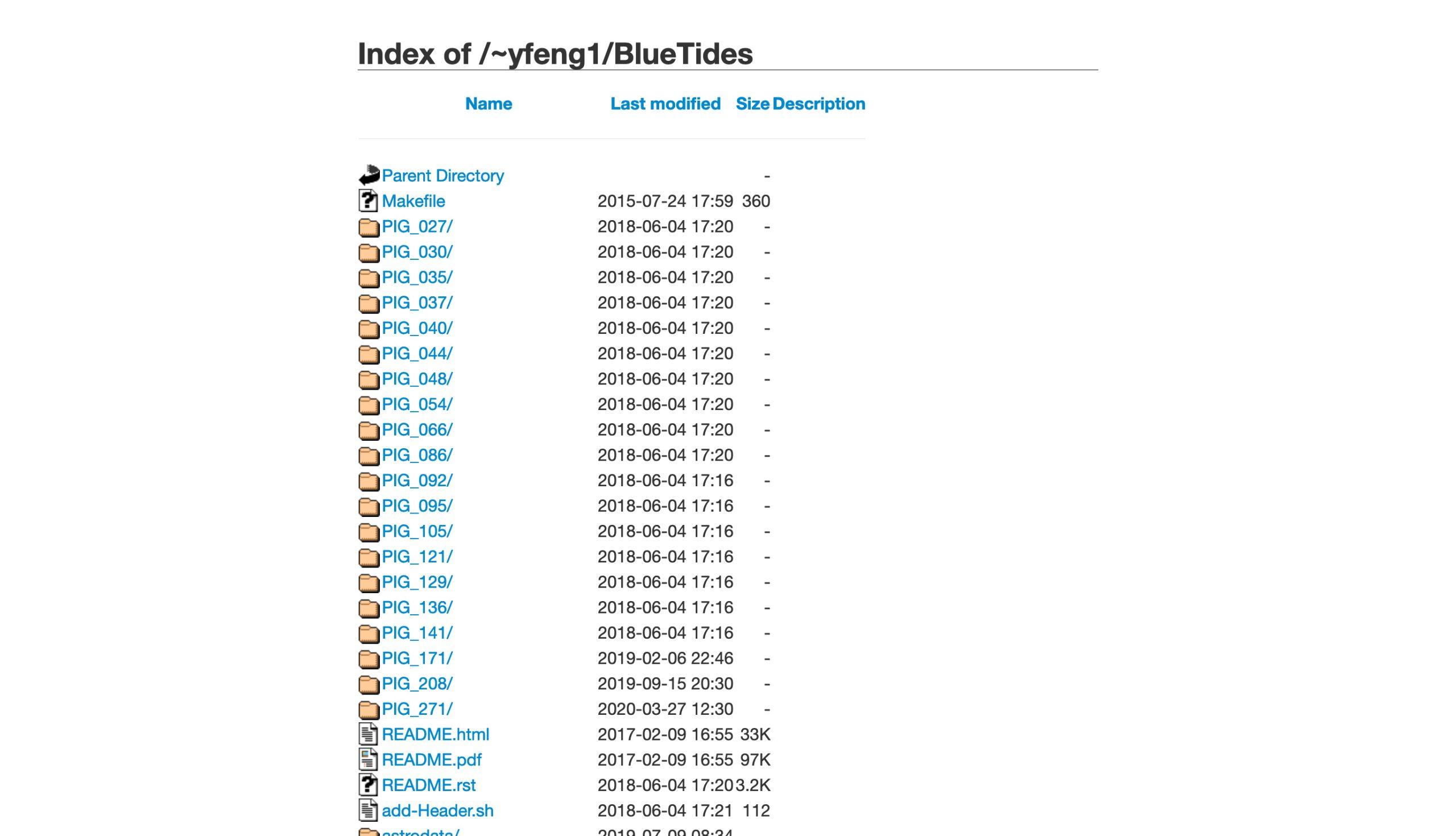

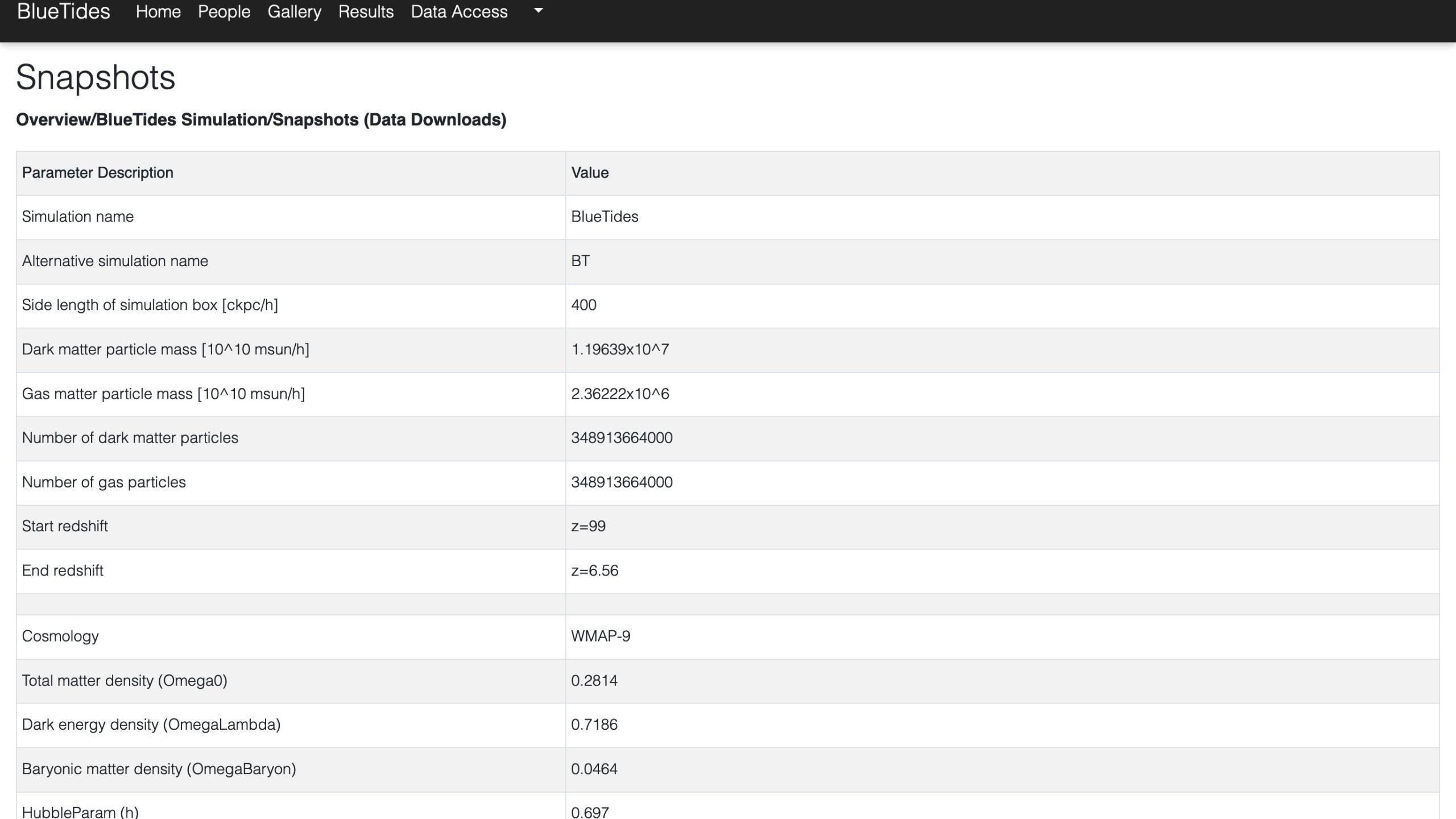

Data sharing

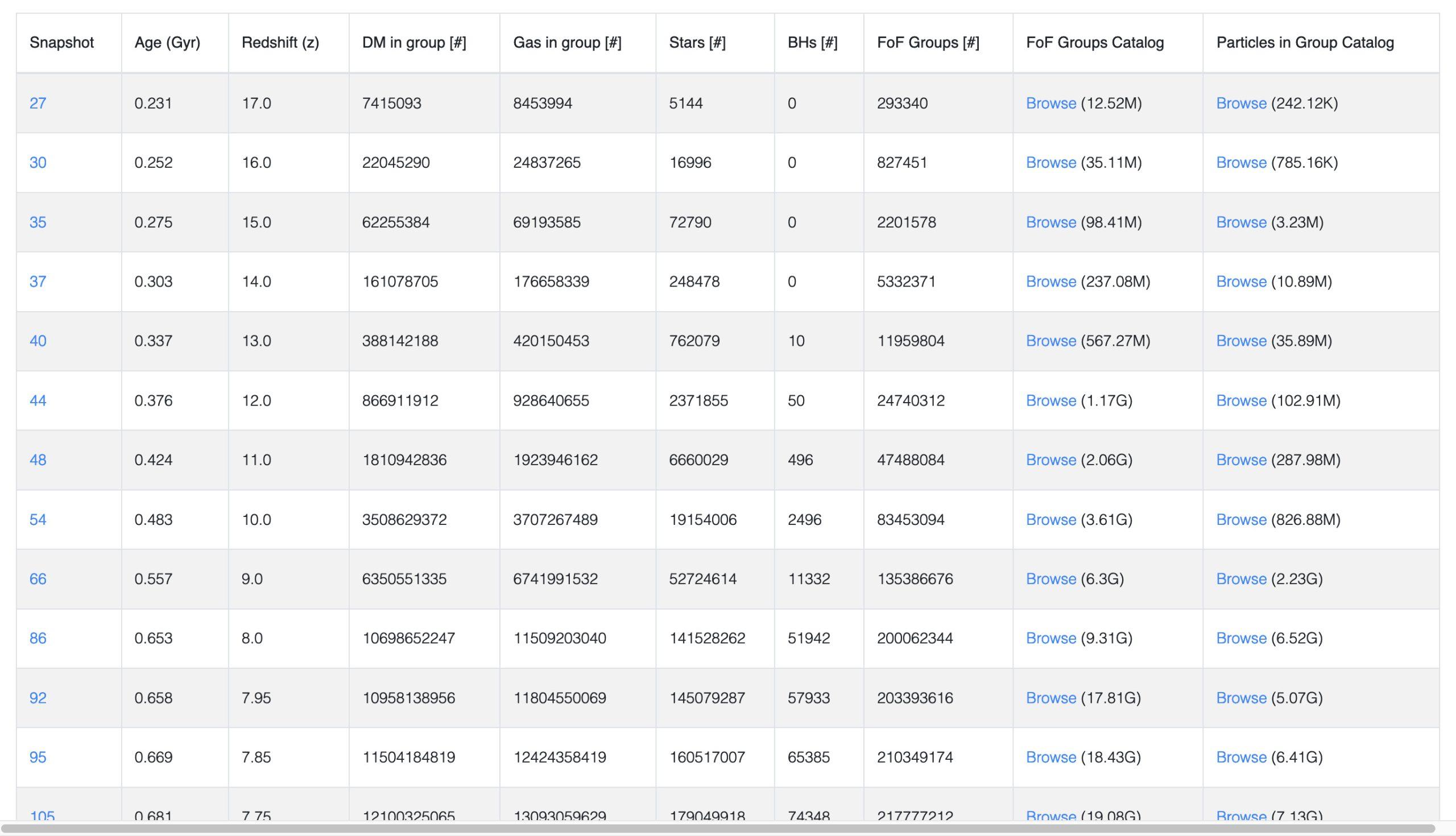

The BlueTides simulation dataset comprises three types of data: simulation snapshots, Friends-of-Friends (FOF) group catalogs, and Particles in Group (PIG) catalogs. The snapshot data is organized in blocks containing information about each particle type: dark matter, gas, star, and black hole. The FOF and PIG catalogs contain properties of the gravitationally bound particle groups (halos) identified by the FOF group-finder algorithm, and of their member particles.

Live data-sharing portal: https://bluetides-portal.psc.edu/

Source code: https://github.com/pscedu/cosmo/tree/main/bluetides-data-sharing-portal

Visual comparison before and after adopting COSMO:

Before

After

Before

After

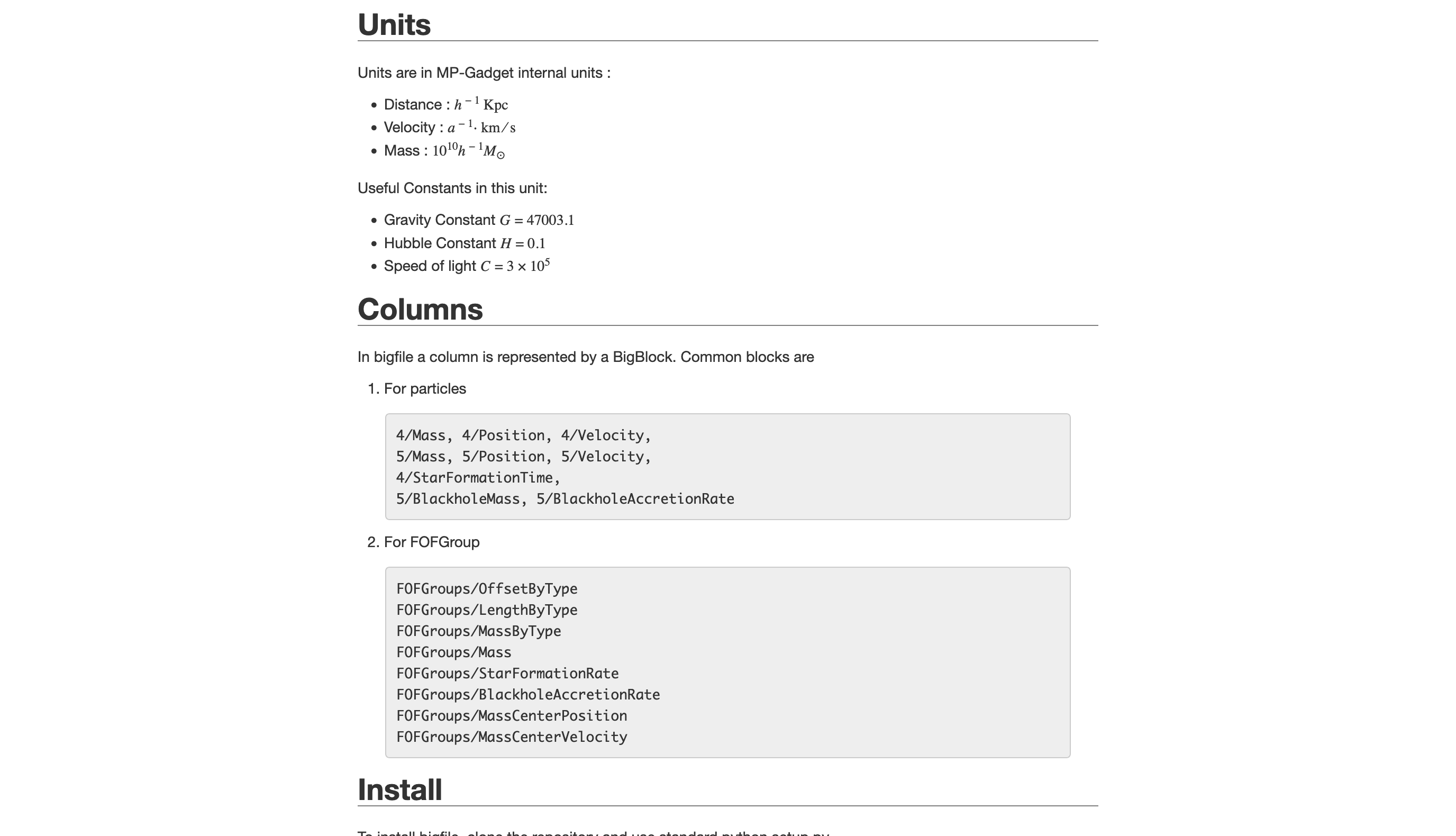

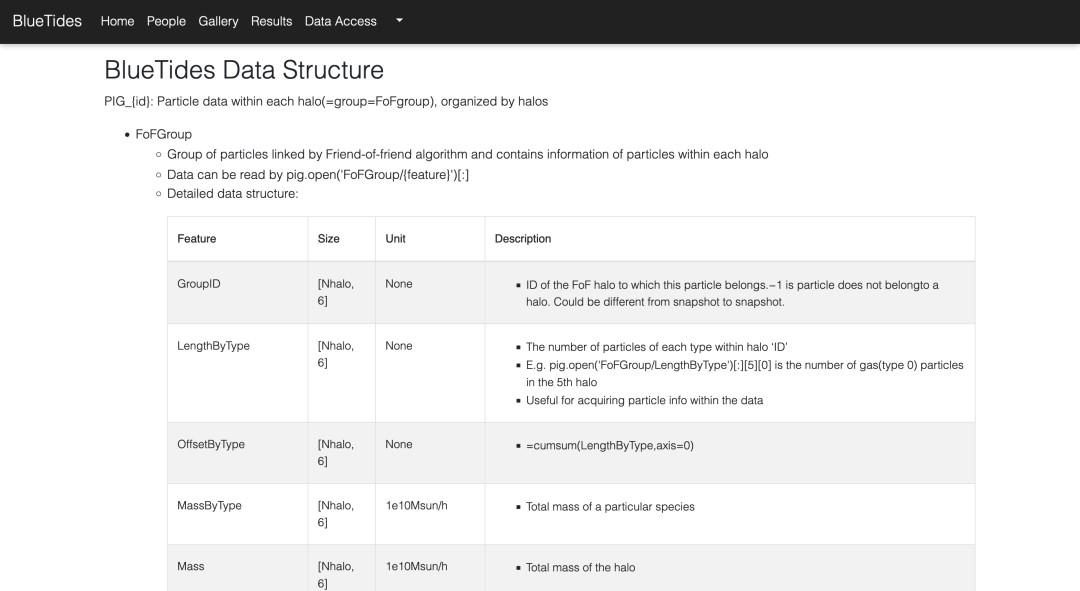

Data structure description

A separate page describes the BlueTides dataset fields, explaining each field and the relationships between them.

BlueTides Data Structure: https://bluetides.psc.edu/data-structure/

Source code: https://github.com/pscedu/cosmo/tree/main/bluetides-landing-description-portal

Visual comparison before and after adopting COSMO:

Before

After

API

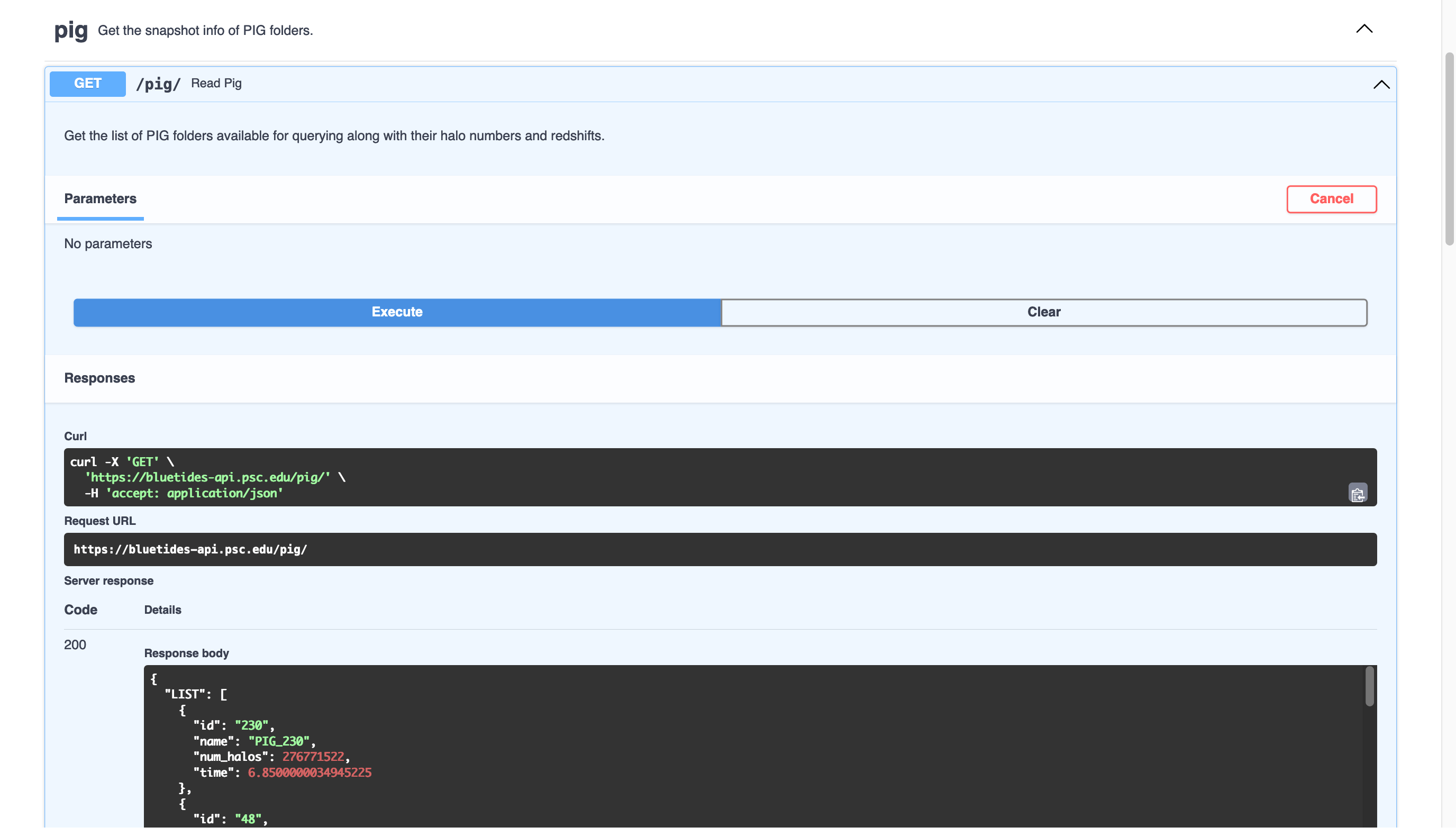

The BlueTides REST API was written with the FastAPI framework to give users a convenient way to access key subsets of the simulation data. The endpoint design follows the structure of the BlueTides data. The canonical endpoints mirror the top-down structure of the data catalogs. The advanced-query endpoints support bulk searches based on specified selection criteria.

API Frontend (interactive API documentation): https://bluetides-api.psc.edu/docs/

API Reference: https://bluetides.psc.edu/api-reference/

API Tutorial: https://bluetides.psc.edu/tutorial/

API URL (for querying data): https://bluetides-api.psc.edu/

Source code: https://github.com/pscedu/cosmo/tree/main/bluetides-api

Visual comparison before and after adopting COSMO:

Before

(No API was available before COSMO.)

After

ASTRID

This is a new dataset that is being made available through COSMO. It is still a work in progress.

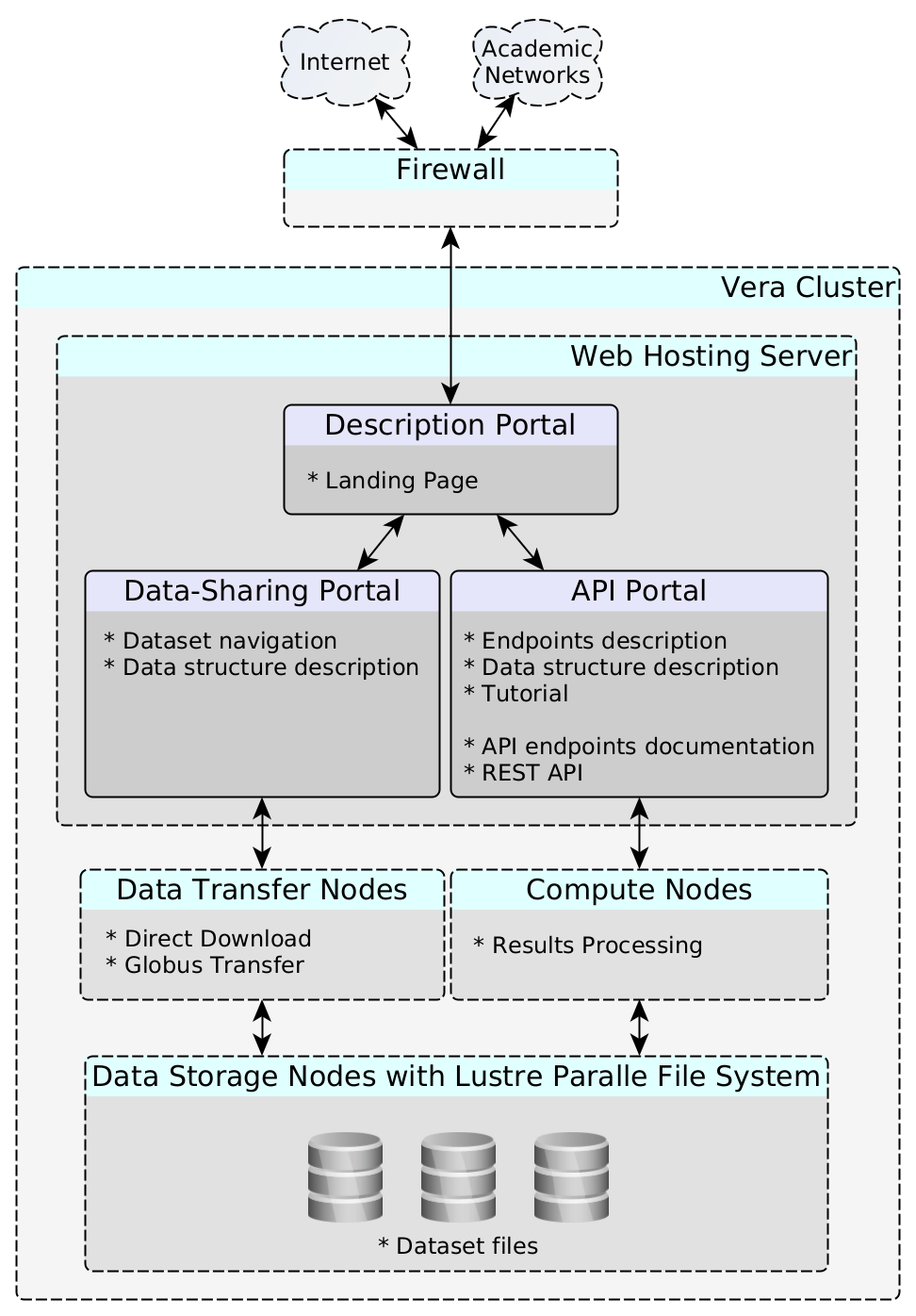

System design and implementation

This diagram shows the structure of COSMO implemented on top of an example cluster. The main components are:

Landing page/description web portal: This component contains general information about the dataset and project. It also serves as the entry point to other sections, such as team member introductions (People), publications (Results), and a gallery showcasing the simulation results (Gallery).

Data sharing web portal: This component provides an overview of the data and individual access to the data files via Globus endpoints (Data Access). Globus offers reliable transfers of large files, with features such as automatic data-integrity checks and the ability to resume transfers after disruptions.

API portal: This component includes the API Reference and the API Tutorial. The API Reference describes the API endpoints, and the API Tutorial shows how to use the API from Python scripts.
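Globus runs integrity checks automatically, but files fetched over a plain HTTPS link are not verified in the same way. If a portal publishes a checksum manifest alongside the data, users can verify their downloads themselves. This is an assumption, since COSMO does not specify such a manifest. The sketch below uses a hypothetical manifest in the text format produced by `sha256sum`.

```python
import hashlib
from pathlib import Path


def sha256_of(path, chunk_size=1 << 20):
    """Hash a file in chunks so large files never need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_manifest(manifest_path, data_dir):
    """Check files listed as '<sha256>  <relative path>' lines.

    Returns the relative paths that are missing or whose hash does not match.
    """
    failures = []
    for line in Path(manifest_path).read_text().splitlines():
        if not line.strip():
            continue
        expected, rel_path = line.split(maxsplit=1)
        rel_path = rel_path.strip()
        target = Path(data_dir) / rel_path
        if not target.is_file() or sha256_of(target) != expected.lower():
            failures.append(rel_path)
    return failures
```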

Limitations

The API must be coded specifically to support your dataset.

Computational requirements

Disk storage

- Decompressed dataset (bigfile format, which multiple workers can read easily)

- Transfer-optimized files for downloads (zip files bundling the decompressed files)

- Example for a 5 TB dataset:

  - Decompressed dataset: 5 TB

  - Transfer-optimized files: sized by the percentage of the data that you want to make available for download
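Transfer-optimized files can be produced by grouping the decompressed files into bundles of bounded size. This keeps each download manageable and resumable. The sketch below assumes a simple greedy size cap and uncompressed zip archives. The function names and the bundle naming scheme are hypothetical and are not COSMO's own tooling.

```python
import zipfile
from pathlib import Path


def plan_bundles(files, max_bundle_bytes):
    """Greedily group (path, size) pairs into bundles of at most max_bundle_bytes.

    A file larger than the limit gets a bundle of its own. Input order is kept,
    so files listed together (e.g. one iteration's outputs) tend to stay together.
    """
    bundles, current, current_size = [], [], 0
    for path, size in files:
        if current and current_size + size > max_bundle_bytes:
            bundles.append(current)
            current, current_size = [], 0
        current.append(path)
        current_size += size
    if current:
        bundles.append(current)
    return bundles


def write_bundles(root, bundles, out_dir, stem="bundle"):
    """Write each planned bundle as a zip archive under out_dir."""
    root, out_dir = Path(root), Path(out_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    written = []
    for i, paths in enumerate(bundles):
        target = out_dir / f"{stem}_{i:04d}.zip"
        # ZIP_STORED copies bytes without compression; ZIP_DEFLATED would
        # trade CPU time for smaller archives.
        with zipfile.ZipFile(target, "w", compression=zipfile.ZIP_STORED) as zf:
            for rel in paths:
                zf.write(root / rel, arcname=rel)
        written.append(target)
    return written
```

Because `ZIP_STORED` stores the bytes as they are, each bundle takes up about as much disk space as its inputs. This is why the transfer-optimized copy adds to the storage estimate above.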

Constraints

You must have enough disk space for the full dataset, covering both the transfer-optimized files and the I/O-optimized (decompressed) files.

Resources

These repositories contain the source code that implements the COSMO recommendations and best practices, using the BlueTides simulation as a proof-of-concept dataset:

- Main COSMO repository: https://github.com/pscedu/cosmo/

- Landing-page/description portal source code: https://github.com/pscedu/cosmo/tree/main/bluetides-landing-description-portal

- Data-sharing Portal source code: https://github.com/pscedu/cosmo/tree/main/bluetides-data-sharing-portal

- API Portal source code: https://github.com/pscedu/cosmo/tree/main/bluetides-api

- Research article: “COSMO: a Research Data Service Platform and Experiences from the BlueTides Project”, PEARC’22: Practice and Experience in Advanced Research Computing.