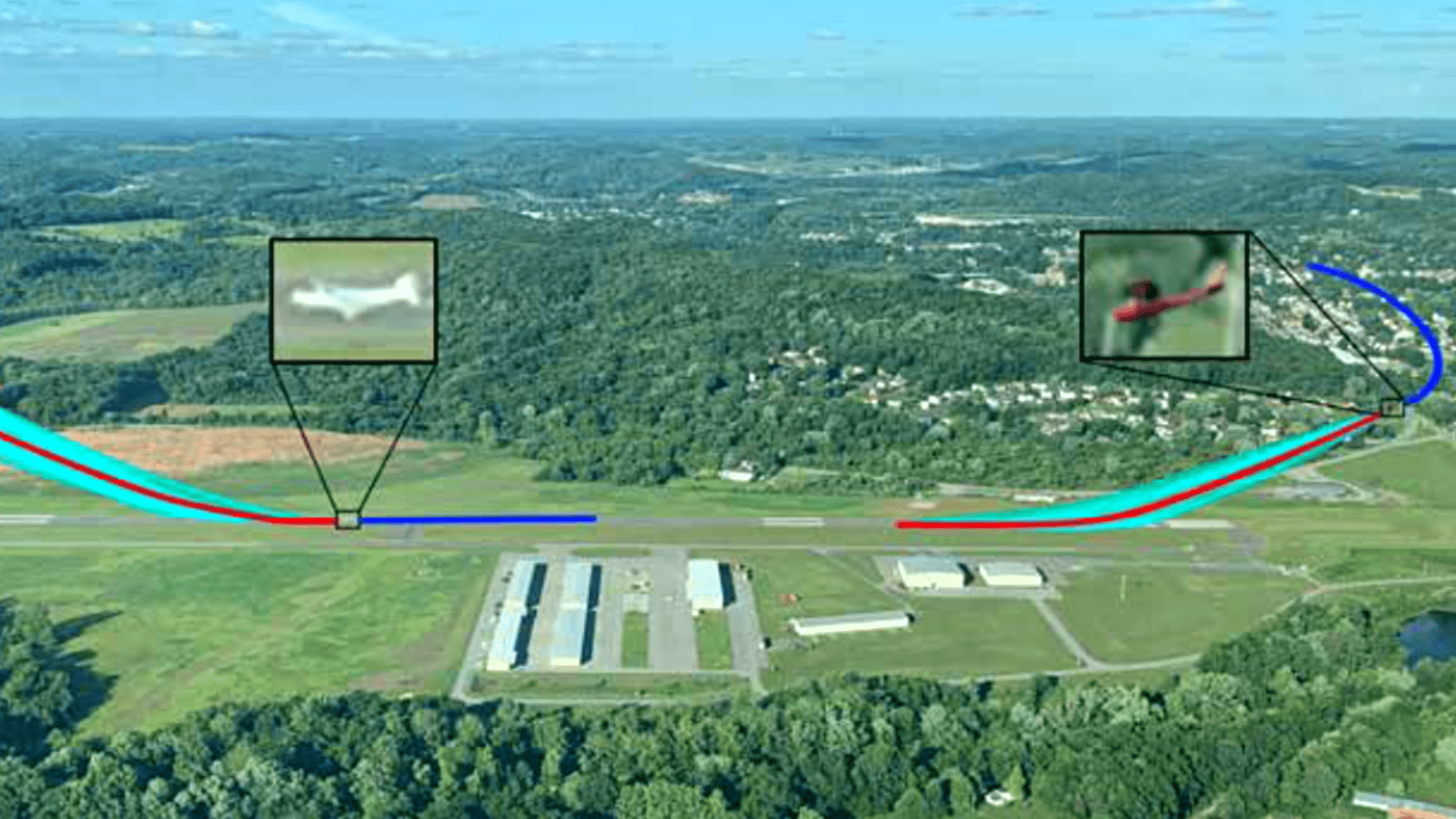

The TrajAir dataset includes recorded ADS-B trajectories of aircraft interacting at a non-towered general aviation airport, along with the weather context from METAR strings. TrajAirNet predicts multi-future samples (cyan) of trajectories for all agents by conditioning each on the history (blue) of all the agents and on the weather context.

Bridges-2-Developed AirDet Approach Removes Requirement for Extensive Retraining in Applying AIs to New Jobs

Artificial intelligence is great at doing specific tasks. But AI algorithms tend to be inflexible, unable to pick up new jobs without extensive retraining. The AirLab team at Carnegie Mellon University’s Robotics Institute has used PSC’s Bridges-2 system to develop a series of approaches that can allow a robot to pick up new capabilities without such time- and computation-expensive retraining.

WHY IT’S IMPORTANT

Artificial intelligence, powered by a method called deep learning, has transformed our world in ways we often take for granted. We shop, search, socialize, learn, and even drive our cars thanks to sophisticated deep learning algorithms: software instructions that the AI constructs by learning from large amounts of human-labeled data and by trial-and-error training. Deep learning AI tools do what they were designed to do with a speed and accuracy that was inconceivable 10 years ago.

The catch lies in “what they were designed to do.” Among the challenges in making AIs more accurate and more useful is that they’re hyper-specialized. A given algorithm can be tuned to a specific job to the point at which it’s as good as or better than a human at that job. But unlike a human, give that algorithm a different job and it’ll likely fail abysmally, at least without extensive retraining on the new job.

“We’re really trying to see whether we can learn how to generalize our models, both from simulated datasets and real-world datasets … We were the first researchers showing a visual-odometry model, which is used to track the robot’s self-motion, training purely from simulation data could be used in real-world navigation tasks.” — Wenshang Wang, CMU AirLab

A team from the AirLab at CMU’s Robotics Institute wanted to create far more flexible AI algorithms. They aimed for a set of generalizable algorithms that could produce AIs able to pick up new tasks reasonably well with minimal retraining. To create these algorithms, which they named AirDet, Sebastian Scherer, head of AirLab, and his students and colleagues at CMU and elsewhere used PSC’s Bridges-2 supercomputer.

HOW PSC HELPED

AIs, the AirLab scientists realized, can reach a moderately accurate level of performance relatively quickly. But refining that performance to an acceptable level requires fine-tuning that demands a lot of computation. Designing a specialized algorithm is easier and computationally cheaper, but a specialized algorithm is not flexible enough to handle unexpected cases when applied to a new task. The AirLab scientists wanted to design general robots rather than building them task by task, an approach that is hard to scale up.

Not that crafting AirDet in the first place was going to be computationally “cheap.” The project would require data at the 100-terabyte scale, roughly a hundred times the storage of a new laptop, as well as around a thousand machine-days of computing power. That was more than the team’s local computing cluster could provide. The NSF-funded Bridges-2 was able to supply that kind of raw power. But Bridges-2 also offered a level of customizability that was ideal for the task. The team could easily apply different levels of computational power and different types of processors, switching from general-purpose central processing units (CPUs) to massively parallel graphics processing units (GPUs), for example. Better yet, when COVID-19 made access to everything more difficult, team members found it easy to connect to and work with Bridges-2 from wherever they were.

“We can, depending on the model size and different applications, choose how much computational power we want to use … [including] GPU resources. It’s very flexible and customizable … People in the [Bridges-2] team also gave us great support; they’re very responsive whenever we have questions.” — Wenshang Wang, CMU AirLab

The team has published several papers reporting early successes with their new approach:

- The team produced a dataset, called TrajAir, that captures the behavior of pilots approaching airports without control towers. The dataset allowed the scientists to build TrajAirNet, an algorithm that predicts the movement of other aircraft in three dimensions and in the contexts of different airports and weather conditions. The work lays a foundation for safe robotic approaches and landings at smaller airports. The AirLab team reported these results at the IEEE International Conference on Robotics and Automation in May 2022.

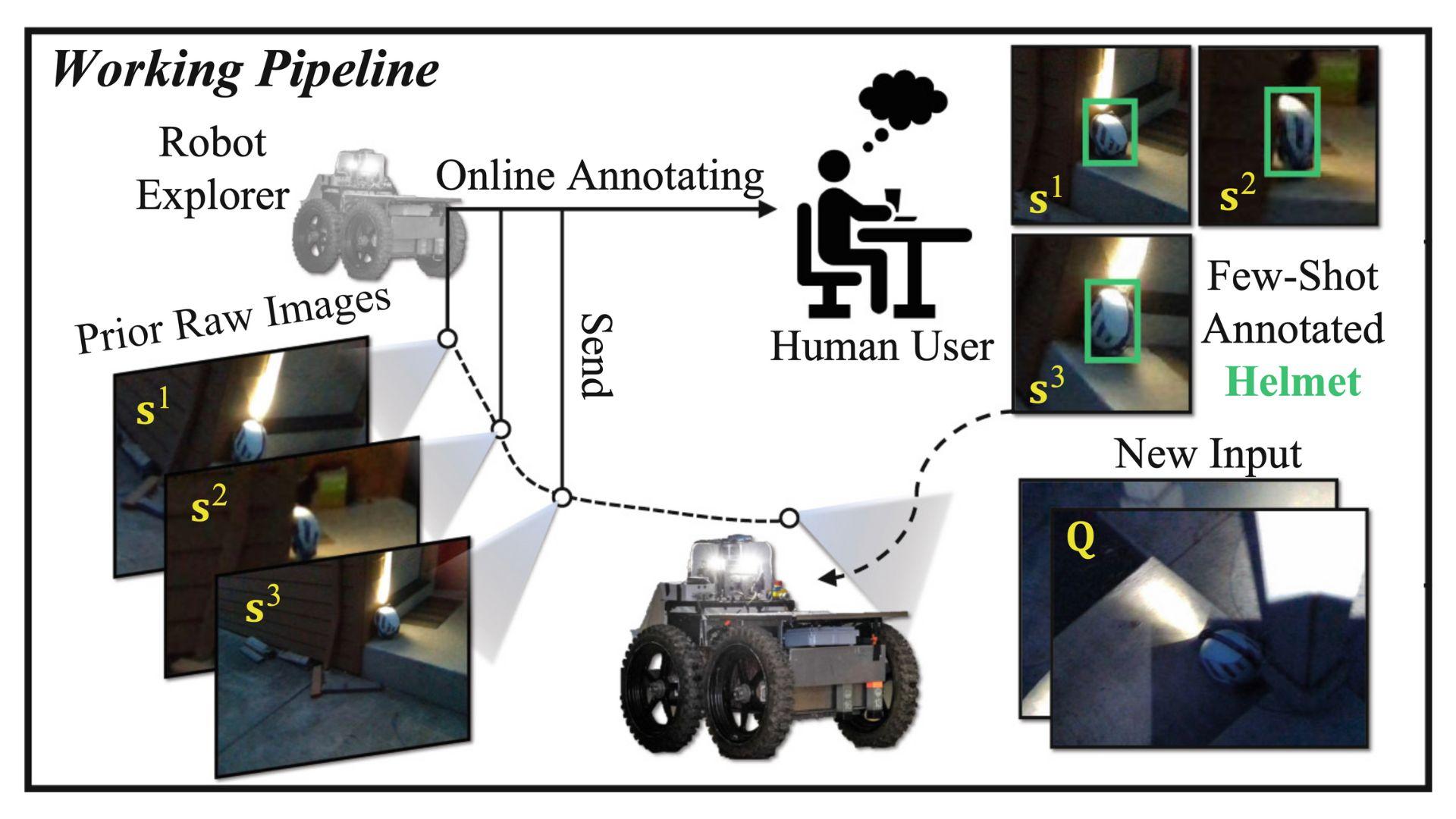

- The team entered a robot in the U.S. government-sponsored DARPA Subterranean Challenge. Using the AirDet-derived FewShot algorithm, their robot navigated an underground obstacle course without human guidance, performing up to 30 to 40% better than AIs that had not been fine-tuned and matching the performance of many that had been specifically fine-tuned for the task. Importantly, the algorithm was able to send images of objects it didn’t recognize to a human observer, who could then label them so the AI would recognize them the next time it encountered them. The scientists reported their results at the European Conference on Computer Vision in October 2022.

- They also tested AirDet for tracking moving objects on the ground from multiple small aerial drones. The application, which required 3D reconstruction of a noisy natural environment, overcame problems such as trees and terrain blocking a drone’s view and uncertainty in each drone’s position. The algorithm is promising for applications as varied as recording sports and entertainment events, searching for lost people, detecting tumors, and monitoring the movements of endangered species in the wild. They reported the work at the IEEE/RSJ International Conference on Intelligent Robots and Systems in October 2021.

- Among other projects, the team published TartanDrive, a large-scale, multi-modal dataset for learning to pilot an all-terrain vehicle through an off-road obstacle course without human guidance. This work was the subject of a paper at the IEEE International Conference on Robotics and Automation in May 2022.

Next, the team is developing models that don’t require the human labeling and annotation that current machine learning models typically need. Instead, these new AIs will be able to learn efficiently and safely from their own experiences interacting with the environment.

The pipeline of the autonomous exploration task and the framework of AirDet. During exploration, a few raw images that may contain novel objects (here, a helmet) are first sent to a human user. Given these few annotated examples online, the robot explorer is able to detect those objects as it observes its surrounding environment.