Bridges Simulations Uncover Shortcoming in Models of Early Universe

Scientists Propose “Hybrid Approach” to Correct Key Simplification, Allowing Accurate Simulations of Galactic Evolution

The origin of the Universe and its galaxies is an enormous, and enormously complicated, topic. Simulations of the process are vital to astronomers trying to determine what to look for in their new radio-wave and visible-light telescopes. But even with powerful supercomputers, cosmologists conducting these simulations need to make simplifications to carry out the work at all. One key simplification is that the masses of the dark-matter halos that surround galaxies single-handedly determine the properties of the stars in those galaxies. Scientists from McGill University and the University of California, Berkeley used PSC’s Bridges system to conduct a massive series of simulations showing that this assumption isn’t quite correct. The team proposes a “hybrid approach” that will eliminate this error in upcoming surveys of early galaxies.

Why It’s Important

There is no bigger topic in science than “Everything.” Cosmologists are eagerly awaiting the launch of a series of space- and ground-based telescopes that promise a clearer window into the beginning of the Universe 13.7 billion years ago. These new research tools will enable a survey of galaxies at the farthest distances possible, in particular with a much wider field of view of a kind of astronomical signal called the “21-centimeter microwave radiation.” This signal, named for its wavelength, comes from the hydrogen in the intergalactic medium surrounding galaxies. The work will improve our information on the “reionization” period that started 150 million years after the Big Bang. This was when the light from the first stars blew apart the hydrogen atoms surrounding them, making the soup of the dark early Universe transparent to visible light. Because of that it’s sometimes called the “Cosmic Dawn.”

One problem is that the new telescopes won’t all be taking simple pictures that human eyes can immediately understand. To make sense of the incoming data, scientists need to simulate galaxy formation so that astronomers understand what they’ll be seeing with the new telescopes and what to look for. But even the most powerful supercomputers on Earth can’t simulate every component of the early Universe in complete detail. So cosmologists conducting the simulations have to make simplifications.

“We tend to think of galaxies in pretty simple terms, and we often describe them in terms of how many stars they have, are they forming stars now, and then maybe some other quantities, like how much gas … And it’s just a few numbers. Obviously in reality, galaxies are very complicated. That’s part of the motivation for [our simulations].”—Jordan Mirocha, McGill University

The most popular of these simplifications involves halo mass. Scientists often assume that the total mass of the dark-matter halo surrounding each galaxy more or less tells them what kind of galaxy it harbors. But Jordan Mirocha of McGill University in Canada and Paul La Plante of the University of California, Berkeley wondered whether this assumption was entirely correct. To test the idea, they turned to the Bridges advanced research computer at PSC.

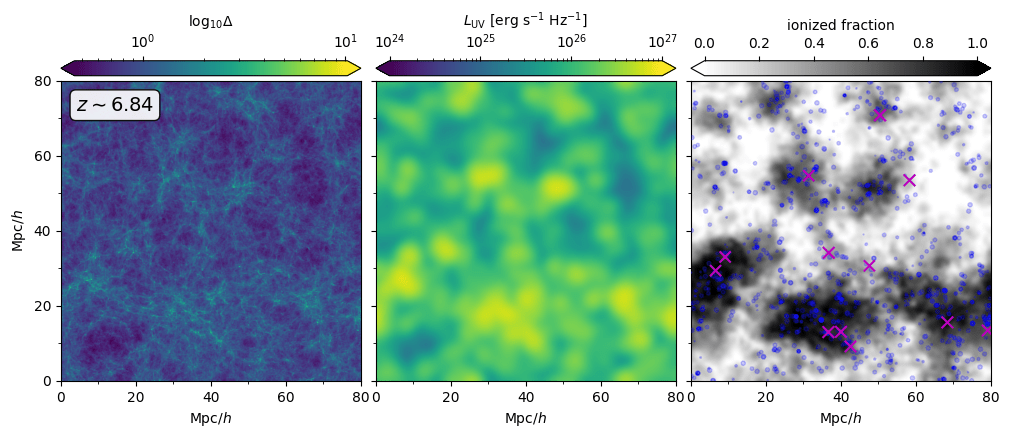

Key simulated observational targets during the “cosmic dawn.” Galaxies form mostly in areas of higher matter density in the early universe (bright areas, left). Even low-resolution maps of the near-infrared background radiation can detect these areas (bright areas, center). Light from new stars then carves out large ionized regions with reduced 21-cm radiation (black areas, right), which are home to many galaxies bright enough to be detected with the new infrared telescopes coming online.

How PSC Helped

To examine the validity of halo mass as a stand-in for galactic structure, the collaborators needed to conduct massive computations. They set out to run simulations of thousands of halos whose development unfolded by random chance to see whether similar halos always host similar galaxies.

The calculations would require a lot of computational power. But they’d also need to store a lot of data on each “particle” (essentially, a chunk of space as small as possible given computational limitations) at every simulation step. To keep the movement of these data within the computer from causing a traffic jam, they’d need a supercomputer with a lot of memory, the same as RAM in a PC. That way, the data could be stored in one place and immediately available to the computer’s processors. Bridges, with its massive 12-terabyte-RAM nodes (each with about a thousand times more memory than a respectable laptop), was perfect for the work. PSC’s support staff also proved key, with the scientists citing help from T. J. Olesky, user engagement specialist, and Tom Maiden, manager of user services, as invaluable in getting their code humming on Bridges.

“[Our simulations consist of many] ‘particles in a box,’ usually on the order of 10 billion or so. You can highly parallelize the code [to speed the calculations], but you also have a high memory requirement just because you have 10 billion numbers you’ve got to keep track of. So the way the code is written, we were restricted to large shared-memory machines rather than distributed memory … We were using the extreme large memory partition of Bridges [to enable] very high-resolution simulations that required … somewhere between 10 and 11 [terabytes of RAM]. At the time, Bridges was one of the only machines in the world that I knew of that I could get time on, and could handle it.”—Paul La Plante, UC Berkeley
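The figures in the quote above lend themselves to a quick back-of-the-envelope check. The sketch below is only an illustration: it takes the quoted particle count and RAM range at face value, assumes decimal terabytes, and uses made-up function names. The per-particle figure it produces is the total working memory divided by the number of particles, not a statement about how the team's code actually stores its data.

```python
# Rough memory budget from the numbers quoted in the article.
N_PARTICLES = 10_000_000_000   # "on the order of 10 billion" particles
NODE_RAM_TB = 12               # RAM on one Bridges extreme-memory node
BYTES_PER_TB = 10**12          # assumption: decimal terabytes


def bytes_per_particle(ram_tb, n_particles=N_PARTICLES):
    """Average working memory per particle for a run that needs ram_tb terabytes."""
    return ram_tb * BYTES_PER_TB / n_particles


def fits_on_node(ram_tb, node_ram_tb=NODE_RAM_TB):
    """True if the run fits in the shared memory of a single node."""
    return ram_tb <= node_ram_tb


for ram_tb in (10, 11):  # the "between 10 and 11" terabytes quoted above
    print(ram_tb, "TB:", bytes_per_particle(ram_tb), "bytes per particle,",
          "fits on one node:", fits_on_node(ram_tb))
```

Under these assumptions each particle accounts for roughly a kilobyte of working memory, and the whole run squeezes into a single 12-terabyte node with little room to spare.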

The results of the team’s simulations confirmed their suspicion that halo mass alone does not tell the whole story about a galaxy. Contrary to what many cosmologists had expected, the brightest galaxies in their simulations were not always surrounded by the largest halos. Many of them had relatively small halos that were growing rapidly and so forming many stars; observed at a single point in time, such a galaxy would look like a much bigger halo growing at an unremarkable rate. If scientists fail to take this possibility into account, it could result in a confusing contradiction between future 21-cm measurements and galaxy surveys with new visible-light telescopes such as the upcoming Next Generation Space Telescope (NGST) or the Roman Space Telescope.
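A toy calculation can make this ambiguity concrete. The sketch below is not the team's model. It assumes, purely for illustration, that a galaxy's star formation rate is a fixed fraction of the rate at which its halo gains mass, and every number and name in it is hypothetical.

```python
# Toy model (an assumption, not the authors' method): stars form at a fixed
# fraction of the rate at which the halo gains mass.
EFFICIENCY = 0.1  # hypothetical fraction of accreted mass turned into stars


def star_formation_rate(halo_mass, specific_growth_rate):
    """Star formation rate in solar masses per billion years.

    halo_mass is in solar masses; specific_growth_rate is the fractional
    mass growth of the halo per billion years.
    """
    return EFFICIENCY * (halo_mass * specific_growth_rate)


# A small halo growing quickly and a halo four times heavier growing slowly.
small_fast = star_formation_rate(halo_mass=1e10, specific_growth_rate=4.0)
large_slow = star_formation_rate(halo_mass=4e10, specific_growth_rate=1.0)

print(small_fast == large_slow)  # True: a single snapshot cannot tell them apart
```

In this simplified picture the two galaxies form stars at the same rate, so brightness measured at one moment cannot distinguish them, even though their halo masses differ by a factor of four.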

Because tracking the formation histories of halos is computationally demanding, the collaborators instead recommend a “hybrid approach,” in which only galaxies bright enough to be seen by the NGST are simulated in detail, while the dimmer galaxies are included as a kind of general background signal. The approach would do a better job of guiding the new 21-cm and visible-light telescopes as they probe greater distances and wider swaths of the sky. The scientists reported their results in the journal Monthly Notices of the Royal Astronomical Society in September 2021.
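The bookkeeping behind such a hybrid scheme can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the published method: the function name, the brightness values and the detection limit are all invented, and "simulated in detail" is reduced here to simply keeping a galaxy as an individual entry.

```python
def hybrid_split(luminosities, detection_limit):
    """Separate galaxies into individually tracked sources and a summed background.

    Galaxies at or above detection_limit are kept one by one; the rest are
    collapsed into a single background total.
    """
    resolved = [lum for lum in luminosities if lum >= detection_limit]
    background = sum(lum for lum in luminosities if lum < detection_limit)
    return resolved, background


# Hypothetical brightnesses in arbitrary units.
galaxies = [0.5, 8.0, 1.5, 12.0, 0.25]
resolved, background = hybrid_split(galaxies, detection_limit=5.0)

print(resolved)    # [8.0, 12.0]
print(background)  # 2.25
```

Only the two brightest galaxies would need the expensive, history-aware treatment, while the three faint ones contribute a single combined number.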