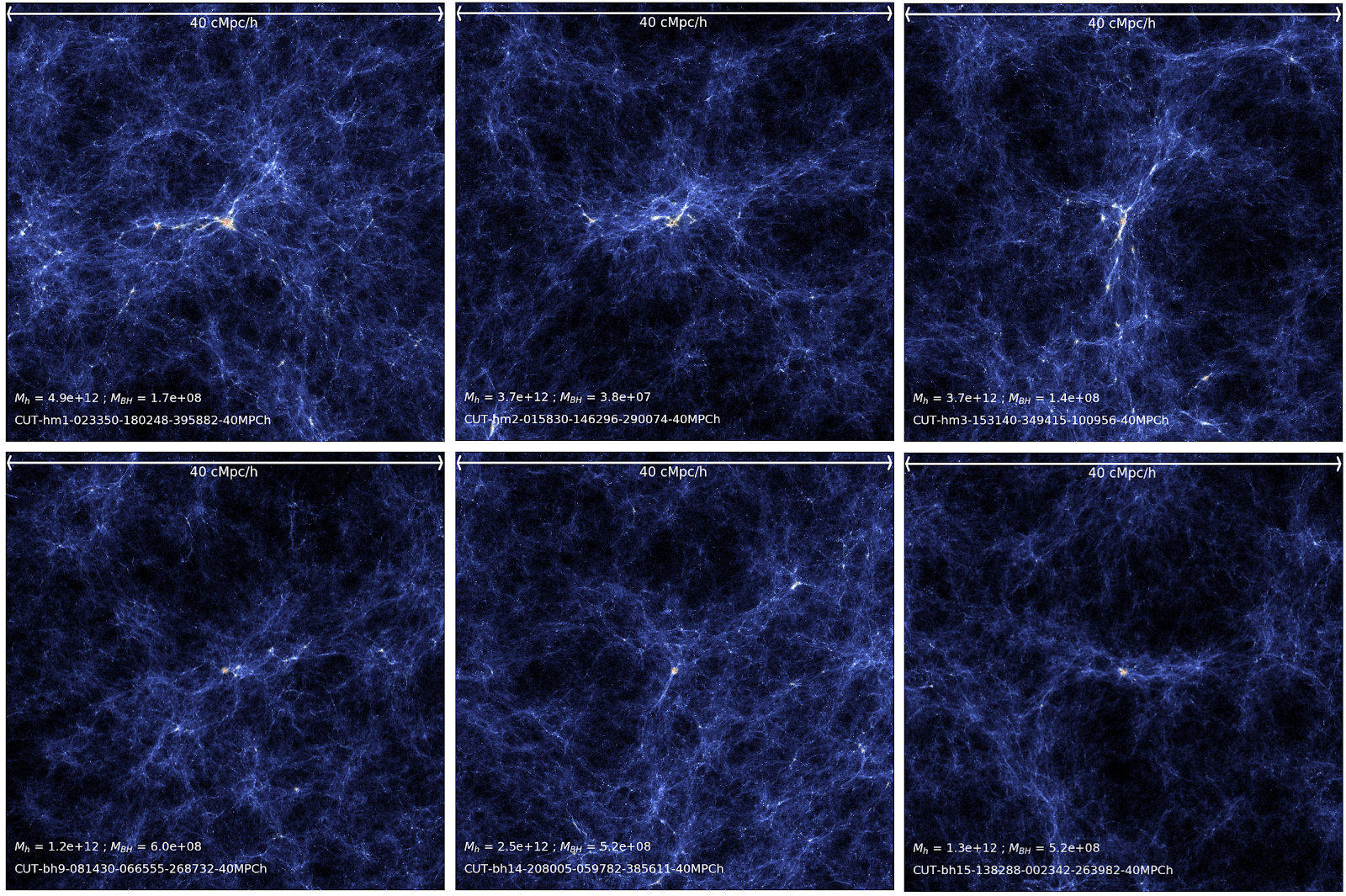

A BlueTides simulation of galaxy formation, centered on massive dark matter halos (top row) and on massive black holes (bottom row), at a distance from Earth so great that the light reaching us would be roughly 12 to 13 billion years old. The image shows the gas density field, color-coded by temperature.

Designed by PSC and McWilliams Center Scientists, COSMO Allows Researchers to Interact Quickly with Vast Sim of Early Universe

Over its life the James Webb Space Telescope will represent a more than $10 billion public investment. So scientists would like to use that powerful instrument efficiently. An important tool for deciding how to use the telescope is computer simulation. But simulations of the Universe become so large that they’re difficult to share and use. A collaboration between PSC’s Artificial Intelligence and Big Data team and scientists at the McWilliams Center for Cosmology at CMU has produced COSMO. This software tool draws on the power of PSC’s NSF-funded Bridges-2 supercomputer to make data and computation on the CMU team’s BlueTides Simulation more accessible to remote scientists.

WHY IT’S IMPORTANT

NASA’s James Webb Space Telescope holds the promise of opening vast new expanses of the Universe to our understanding. It also represents $8.8 billion of taxpayer money spent to date, with a further $861 million to support an expected five years of operation.

No surprise, then, that scientists want to get every bit of bang (not to say Big Bang) they can out of those bucks! When they “aim” this amazing instrument, they want to be sure to make the odds of discovery as favorable as they can. Equally important is that scientists have access to the incredible mountains of data coming from simulations designed to give them an idea of what to look for.

“Dr. Di Matteo had a data set that was quite big. And that data set is BlueTides. [Her team] initially had a web page [with] a list of files … The idea is that she just wanted a way to be able to centralize all of [it] … so people could see what are the things that they should be doing or working on [and] to be able to share these kinds of data sets in a better way.”

— Julian Uran, PSC

The BlueTides Simulation project, one of the biggest treasure troves of simulated data, is a vast artificial universe created with the Blue Waters supercomputer at the National Center for Supercomputing Applications. Built by Professor Tiziana Di Matteo’s team at CMU’s McWilliams Center for Cosmology in collaboration with PSC experts, BlueTides is a valuable tool for maximizing the Webb Telescope’s success.

There is a problem with BlueTides, though. It’s enormous. To find the data most useful for their work, scientists had to search for a kind of “needle in a needlestack.” And those needles, if you’ll forgive the nasty mixed metaphor, are coming out of the business end of a fire hose.

To help researchers across the country and the world tame the data tsunami that is BlueTides, Di Matteo’s group has worked in close collaboration with the PSC team, including the Artificial Intelligence and Big Data group directed by PSC’s Paola Buitrago. Together these scientists have created COSMO, a data service platform that helps scientists minimize both the amount of data they need to download from BlueTides and the amount of computation their own computers need to carry out. COSMO runs on Vera, the CMU Physics Department’s supercomputer hosted at PSC, as well as on PSC’s flagship Bridges-2 system.

HOW PSC HELPED

BlueTides contains something like 50 petabytes of data. That’s 50,000 times as much as you’ll find in a high-end laptop’s storage. Clearly, downloading all that data to a scientist’s own computer is out of the question, even if that data could be transmitted in a reasonable amount of time (which is also difficult).
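The scale described above can be checked with a few lines of arithmetic. The sketch below is only an illustration: the 1-terabyte laptop is an assumption implied by the "50,000 times" comparison, and the two link speeds (1 and 100 gigabits per second) are hypothetical sustained rates chosen for the example, not figures from PSC.

```python
# Back-of-the-envelope check, using decimal (SI) units.
PETABYTE = 10**15  # bytes
TERABYTE = 10**12  # bytes


def transfer_days(size_bytes: int, link_gbit_per_s: float) -> float:
    """Days needed to move size_bytes over a link sustained at link_gbit_per_s."""
    bytes_per_second = link_gbit_per_s * 1e9 / 8  # 8 bits per byte
    return size_bytes / bytes_per_second / 86_400  # 86,400 seconds per day


bluetides_bytes = 50 * PETABYTE  # "something like 50 petabytes"
laptop_bytes = 1 * TERABYTE      # assumption: a 1 TB high-end laptop drive

ratio = bluetides_bytes // laptop_bytes
days_1g = transfer_days(bluetides_bytes, 1.0)      # hypothetical 1 Gbit/s link
days_100g = transfer_days(bluetides_bytes, 100.0)  # hypothetical 100 Gbit/s link

print(f"BlueTides is about {ratio:,} laptop drives")
print(f"At 1 Gbit/s:   about {days_1g:,.0f} days")
print(f"At 100 Gbit/s: about {days_100g:,.0f} days")
```

Under these assumptions, a full download would take more than a decade on the slower link and about a month and a half on the faster one, before counting any overhead.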

Part of COSMO, then, consisted of making the BlueTides data browsable through a REST API, a widely used convention for web interfaces that scientists already knew. PSC’s flagship Bridges-2 could fill this need via its data transfer nodes, which were designed from the beginning to handle massive amounts of data.

But importantly, COSMO went further. Even narrowed down, the relevant data can be a bear to handle and to compute on. Because of this, COSMO also lets scientists carry out their computations on Bridges-2 itself, using its powerful computational nodes to deliver answers to their queries.

“COSMO is a set of recommendations enabling a data set to be browsable over the Internet [and] downloadable … Something else that we enabled was being able to interact with that data via an API … You could use this entry point for saying, OK, I need this specific data from the data set or, using the data, compute something, and just give me the result that will allow me to save download time and setting up time. [You] just interact with the data without having to transfer it.”

— Julian Uran, PSC

Developed through the McWilliams Center for Cosmology 2020 Seed Grant to address the challenge of sharing and accessing massive scientific datasets, starting with the multi-terabyte BlueTides, COSMO consists of three key components:

- A web portal for browsing dataset structures at different granularity levels

- A REST API for programmatic data access

- A set of domain-agnostic recommendations for scientific data sharing

The API component is particularly powerful because it allows researchers to interact with terabytes of simulation data without requiring full downloads. Scientists can query specific subsets of data or request computed results directly through the API, dramatically reducing both transfer time and local storage requirements. This approach transforms how researchers access large-scale scientific datasets: instead of downloading entire simulations that might take days or weeks, they can get targeted results in minutes while maintaining the full analytical capabilities they need. The project successfully demonstrated proof of concept with the BlueTides Simulation and has since expanded to integrate additional datasets into the COSMO framework.
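To make the two kinds of request described above concrete, here is a minimal sketch of how a client might build them. Everything in it is an assumption made for illustration: the base URL (on the reserved example.org domain), the route names, the query parameters, and the snapshot and field names are hypothetical and are not taken from COSMO's actual API. The code only constructs URLs and makes no network calls.

```python
from urllib.parse import urlencode

# Hypothetical base URL; example.org is a reserved placeholder domain.
BASE_URL = "https://cosmo.example.org/api"


def subset_url(snapshot: str, field: str, start: int, stop: int) -> str:
    """Build a URL asking for one slice of one field (hypothetical route)."""
    query = urlencode({"field": field, "start": start, "stop": stop})
    return f"{BASE_URL}/snapshots/{snapshot}/data?{query}"


def compute_url(snapshot: str, field: str, operation: str) -> str:
    """Build a URL asking the server to compute a result (hypothetical route)."""
    query = urlencode({"field": field, "op": operation})
    return f"{BASE_URL}/snapshots/{snapshot}/compute?{query}"


# Request a small slice of the data instead of the whole file ...
print(subset_url("snap_001", "gas_density", 0, 1000))
# ... or ask the server to do the computation and return only the answer.
print(compute_url("snap_001", "gas_density", "mean"))
```

In both cases the response would be far smaller than the underlying files, which is the point of the design: the heavy data stays on the server side and only the slice or the computed result travels over the network.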

“By providing streamlined access, COSMO maximizes the impact of the BlueTides project and ensures its valuable data can be broadly utilized. Leveraging the storage and computational resources of PSC’s Bridges-2 system, the project also demonstrates an effective model for addressing a widespread need: hosting and serving large-scale scientific data to a broad research audience.”

— Paola Buitrago, PSC

COSMO has only begun to spool out answers for scientists’ questions — and recommendations on what questions to ask next, and in effect where to point the telescopes. The team presented a paper on users’ initial experiences with COSMO, with PSC’s Julian Uran as primary author, at the PEARC ’22 supercomputing conference. Their paper won the conference’s “Best Short Paper” award. Even better, a proposal based on COSMO data and results by Los Alamos National Laboratory Oppenheimer Postdoctoral Fellow Madeline Marshall and her team, then at the University of Melbourne in Australia, earned time in the Webb Telescope’s first observing cycle to observe the Universe’s earliest quasars.

COSMO, ironically, is about more than just the Universe. Its methods can be used to query and compute on any large data set. They’ll work just as well for analyzing the sound signatures and frequencies of bird calls as for studying quasar formation. Future applications of the tool will include opening it up to other types of specialized knowledge that require searching through needle-in-a-needlestack data.